Shipping like Claude Code ⚡

Some reflections on the recent deep dive about how the Claude Code team works.

Hey, Luca here, welcome to a monthly free edition of Refactoring! To access all our articles, library, and community, subscribe to the full version.


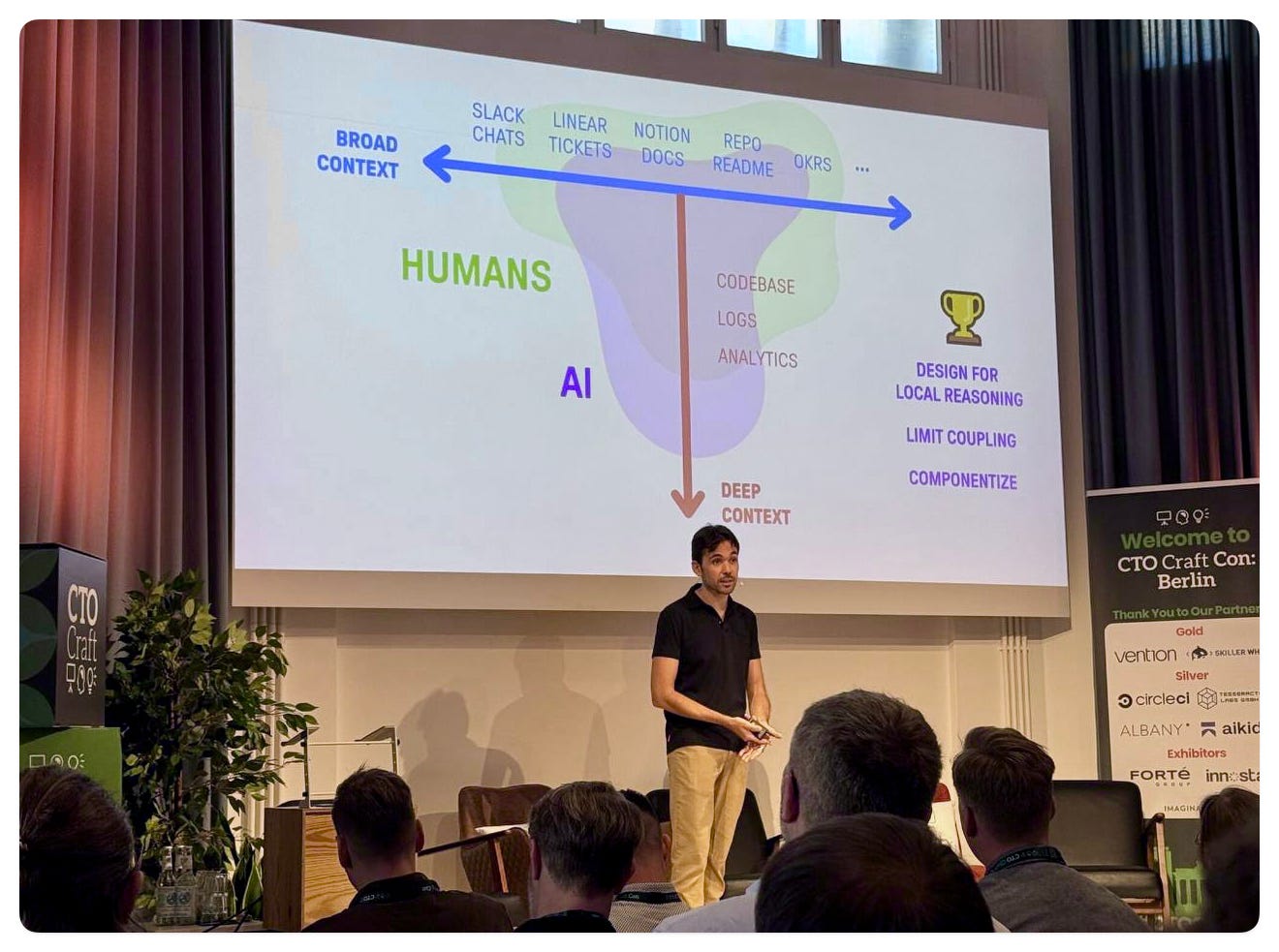

Hi everyone! Today’s edition will be lighter than usual because this week I have been largely away! I took a couple of days off and then went to Berlin to keynote at the CTO Craft Con. The conference has been a blast, and I am grateful to the organizers for inviting me.

On stage I brought up one of my recent mantras, which is: what’s good for humans is good for AI. And I also argued that to get the most out of AI we’ll need to make engineers own more things — not just do more of the same.

Teams that are winning today are those where individuals are able to prototype things from top to bottom, and where people rarely get blocked by others.

Coincidentally, just yesterday Gergely published an awesome piece about How Claude Code is Built. I read it on my flight back to Rome, and it feels like an extreme version of the above.

So today I would like to comment on it, and try to answer the obvious question: can every team work like this?

Spoiler: to me the answer is no — but we’ll get there.

Disclaimer: the original article is for paid subscribers of The Pragmatic Engineer, so to respect Gergely’s work I will just comment on the ideas included in the free part, which is a lot of stuff anyway! I can also say I am a happy paid subscriber of TPE, so consider subscribing!

So how does the Claude Code team work? It turns out, Claude Code is used a lot to… write Claude Code, which was to be expected. Then, here are the three most interesting bits to me:

They ship extremely fast — each engineer creates on average ~5 releases per day. The team is made of ~10 engineers, which gets them to 50-60 releases per day.

Engineers prototype full features — which get tested internally by the team before they get to production.

AI is used a lot for code quality — from TDD to first-pass code reviews.

So let’s comment on these:

🚢 How do you ship that fast?

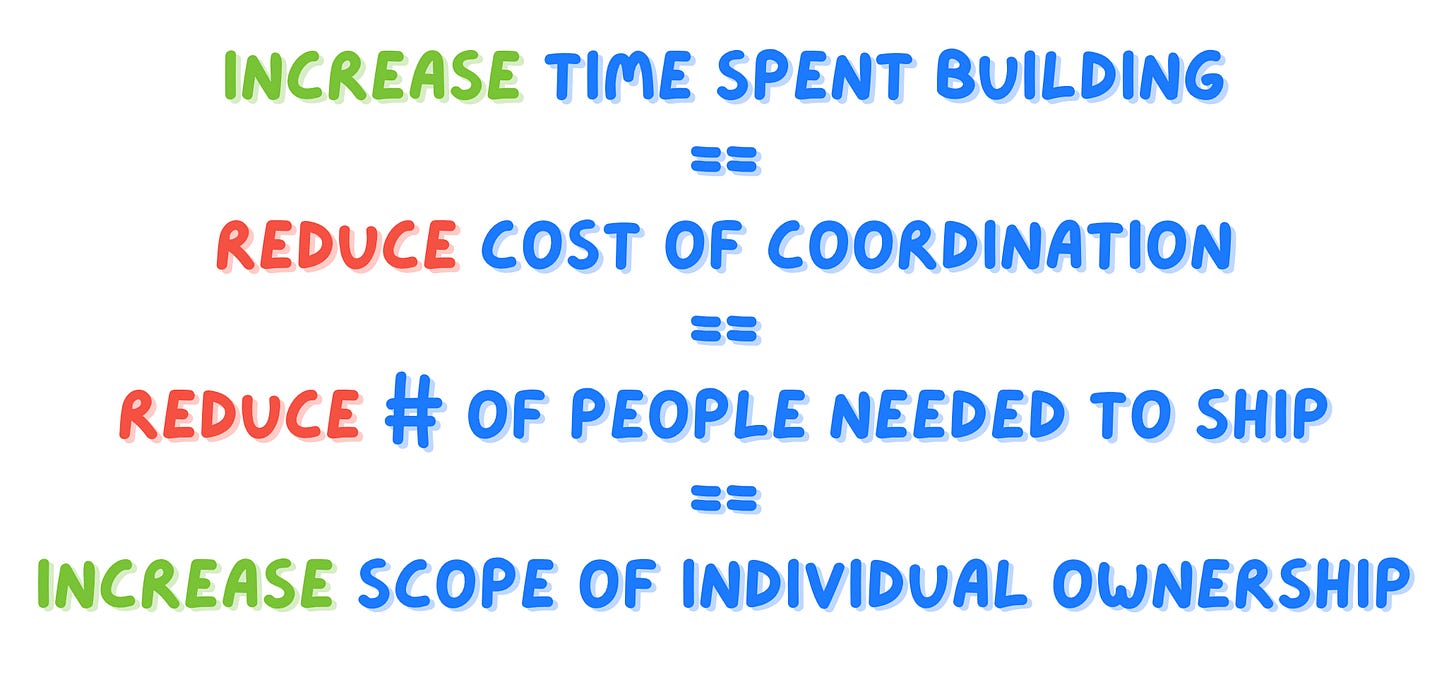

The only way an engineer can ship 5 different things in a day is if they don’t need to spend a lot of time coordinating with others, which is evident from Gergely’s write-up.

On the Claude Code team, individuals often take on full features, build a series of prototypes by themselves, settle on what feels right in their own judgment, and share it with others for review.

What stops the rest of us from working like this? It’s usually two barriers:

🔨 Tech barrier — single engineers can’t build the whole thing.

🎨 Product barrier — single engineers can’t figure out what to build, product and design-wise.

Most teams have some version of these barriers and it’s important to invest in making them smaller over time. In my experience this has to be done intentionally because, otherwise, complexity is like entropy: it only grows, making the barriers eventually insurmountable, in turn making your team slow.

I also believe these need to be addressed exactly in this order. First you make engineers more proficient on the whole tech stack, and then you make them more proficient in product. That’s because the former is useful also without the latter, while the opposite is less true.

The other reason why this order matters is that, in most cases, the tech barrier is easier to overcome than the product one: making, say, a frontend engineer somewhat proficient in backend is easier than making them more proficient in product, for at least two reasons:

Tech skills are all adjacent to each other — a lot of the mental models directly transpose to other levels of the stack, plus AI helps a lot by providing a layer of universal basic proficiency across everything.

Product is not just about skills — it is about being continuously exposed to users’ problems so that you can find solutions for them, and evaluate them correctly. To make this happen you don’t need (only) skills: you need a process.

So, in many cases the tech journey to increase engineers’ scope looks straightforward (which doesn’t necessarily mean easy): improve tooling, make the stack simpler, invest in good testing, etc. With product, instead, people often don’t know where to begin.

We wrote several times about this in the past, most recently with the guys at Atono. However, every team’s journey is different, and it’s already meaningful to agree that product shouldn’t be confined to PMs and designers, so you can gradually trend in the right direction.

Now, in this messy world, some teams have an unfair advantage: their users are engineers. It’s not a coincidence that the best product engineering stories we've covered have consistently come from dev tools: Claude Code, Atono, Bucket, or Convex. Being a user of your product gives you instant product proficiency, without the need to be overly exposed to customer success stories, domain experts, and more.

This is at the core of how the Claude Code team moves so fast, but there is also another sneaky factor, which is possibly even rarer: UI design is almost non-existent. Or, better, it exists but it is fully understood by engineers, since we are talking about a CLI tool.

All together, it means engineers can come up with good product ideas and pursue them with almost no need to coordinate with anyone. Add to that an obviously good understanding of how to elevate work with AI, and you get the holy-grail team.

🔍 AI for code quality

Now, if the product angle of how Claude Code works is hard to copy, I believe we can and should absolutely steal how they approach code quality, and how they get help from AI on this.

In fact, while the main narrative that is being pushed today is that AI reduces code quality, we already argued last week that we should use it to improve quality instead.

So here are some basic ideas that are very easy to implement:

Enforce automated testing on everything: AI now makes writing tests 10x cheaper.

Run security and static analysis checks in the IDE: get real-time feedback on the code being written, via AI plugins. You can also use quality-focused MCP servers, like Codacy, Context7, and more, to steer the AI-generated code automatically.

Do AI code reviews: let AI take a first pass on code reviews, so humans can focus on higher-value comments instead of looking for missing tests or obvious code smells. CodeRabbit is fantastic for this.

This is all plug-and-play and doesn’t require you to change your workflows, so there are zero excuses not to work like this right now.
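To make the first idea a bit more concrete, here is a minimal sketch of a check you could run in CI to flag changed source files that ship without a matching test. It is only an illustration under stated assumptions: the function name `files_missing_tests` is made up, and it assumes a hypothetical convention where `billing.py` is covered by `test_billing.py`. Your repo layout and tooling will likely differ.

```python
from pathlib import PurePosixPath


def files_missing_tests(changed_files):
    """Return changed Python source files with no matching test file in the same change.

    Assumes a hypothetical naming convention: foo.py is tested by test_foo.py.
    """
    changed = [PurePosixPath(p) for p in changed_files]

    # Names of test files touched by the change, e.g. {"test_billing.py"}.
    touched_tests = {p.name for p in changed if p.name.startswith("test_")}

    missing = []
    for p in changed:
        is_source = p.suffix == ".py" and not p.name.startswith("test_")
        if is_source and f"test_{p.name}" not in touched_tests:
            missing.append(str(p))
    return missing


# Example: a change set where cart.py was modified without touching its test.
change_set = ["src/billing.py", "tests/test_billing.py", "src/cart.py", "README.md"]
print(files_missing_tests(change_set))
```

In practice you would feed it the list of files changed in a pull request and fail the build (or just leave a comment) when the result is not empty. It is a blunt heuristic, but it turns "enforce testing" into something a pipeline can nudge on.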

That’s it for today! It’s an exciting time and it’s great to hear stories about how modern startups work. As always, it’s important not to cargo-cult anything but to understand how and why things work the way they do, and what can be useful for us vs what we can’t replicate.

I wish you a great week!

Sincerely 👋

Luca

Thanks for the namecheck! The easiest way to start with Codacy is to search for the Codacy extension in your IDE, which will automagically do all the necessary MCP configuration and also add rules to ensure that the tools actually get run. Check us out on VSCode (https://marketplace.visualstudio.com/items?itemName=codacy-app.codacy); we also just launched JetBrains compatibility (https://plugins.jetbrains.com/plugin/23924-codacy).