What's good for humans is good for AI ❤️

The single most important mental model to get the most out of AI.

For a couple of months now, a lot of my attention has been focused on a single topic: how good engineering teams are using AI.

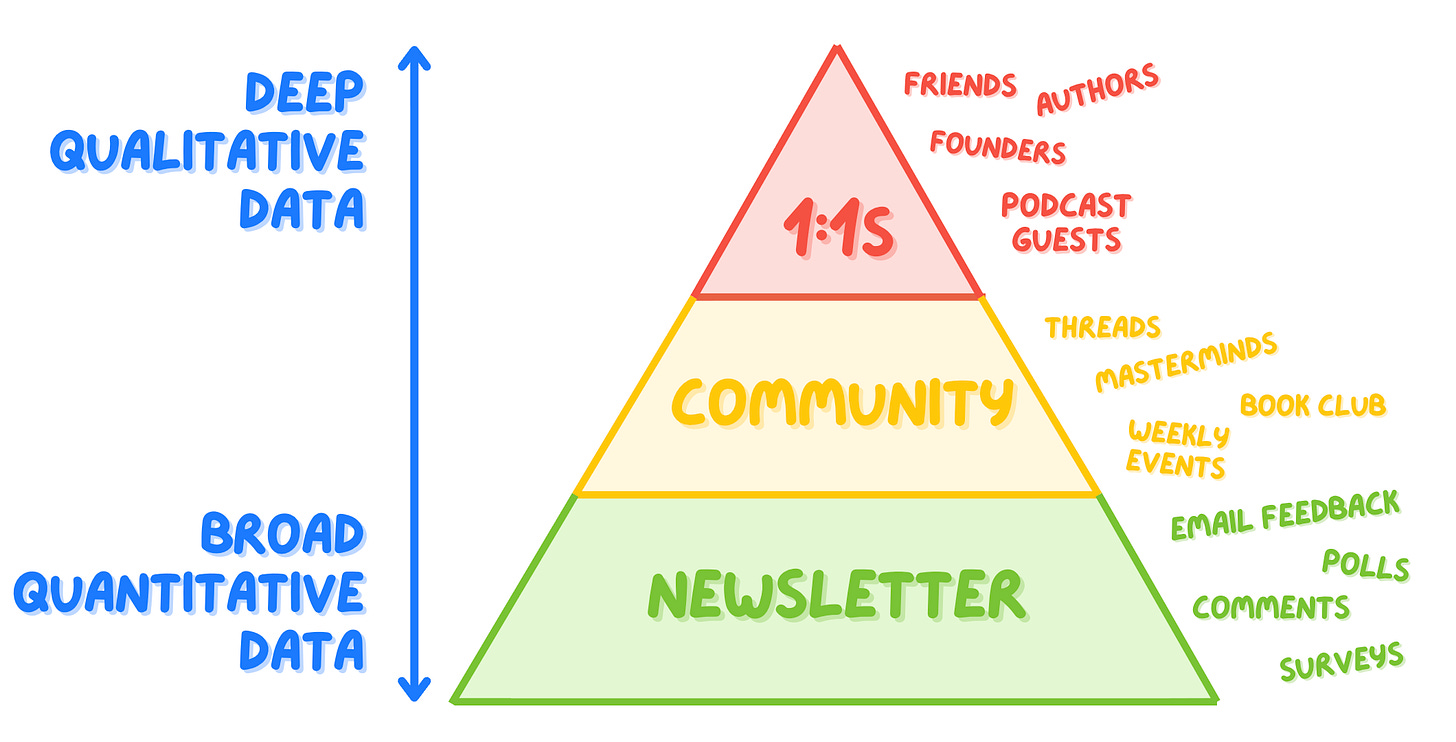

I have been exploring this from many angles:

We are wrapping up our newsletter survey about it — and the full industry report will come out in a couple of weeks.

We explored various angles of it in community threads and events.

I had a lot of 1:1 conversations about this topic with podcast guests and real-world engineering teams from AI-first companies like CodeRabbit, Unblocked, Augment, Convex, and more.

Last but not least, I got my hands dirty by coding a lot recently, and making good progress on my own side projects.

All in all it’s like a big pyramid of information that goes from broad, quantitative data points, to deep, qualitative (but also more anecdotal) ones 👇

So, if you are a tech leader who’s trying to figure out how to think about AI, and what to make of it, here are the main things I believe you should know:

📉 Progress is slowing down — so this is a good time to take stock of where we are.

🤷 Productivity data is inconclusive — as it has always been.

🤝 What’s good for humans is good for AI — there is no secret formula… fortunately.

🔬 AI should improve code quality — not threaten it.

🏯 A lot of teams need to be redesigned — to get the most out of AI.

There is a lot to unpack, so let’s dive in!

📉 Progress is slowing down

Over the past two years, whenever I wrote an article about AI, I made the same joke: that those ideas were bound to become outdated in a couple of weeks or so.

Well, today it doesn’t feel that way anymore. In fact, we can arguably say that AI progress — in coding, but also in general — has significantly slowed down.

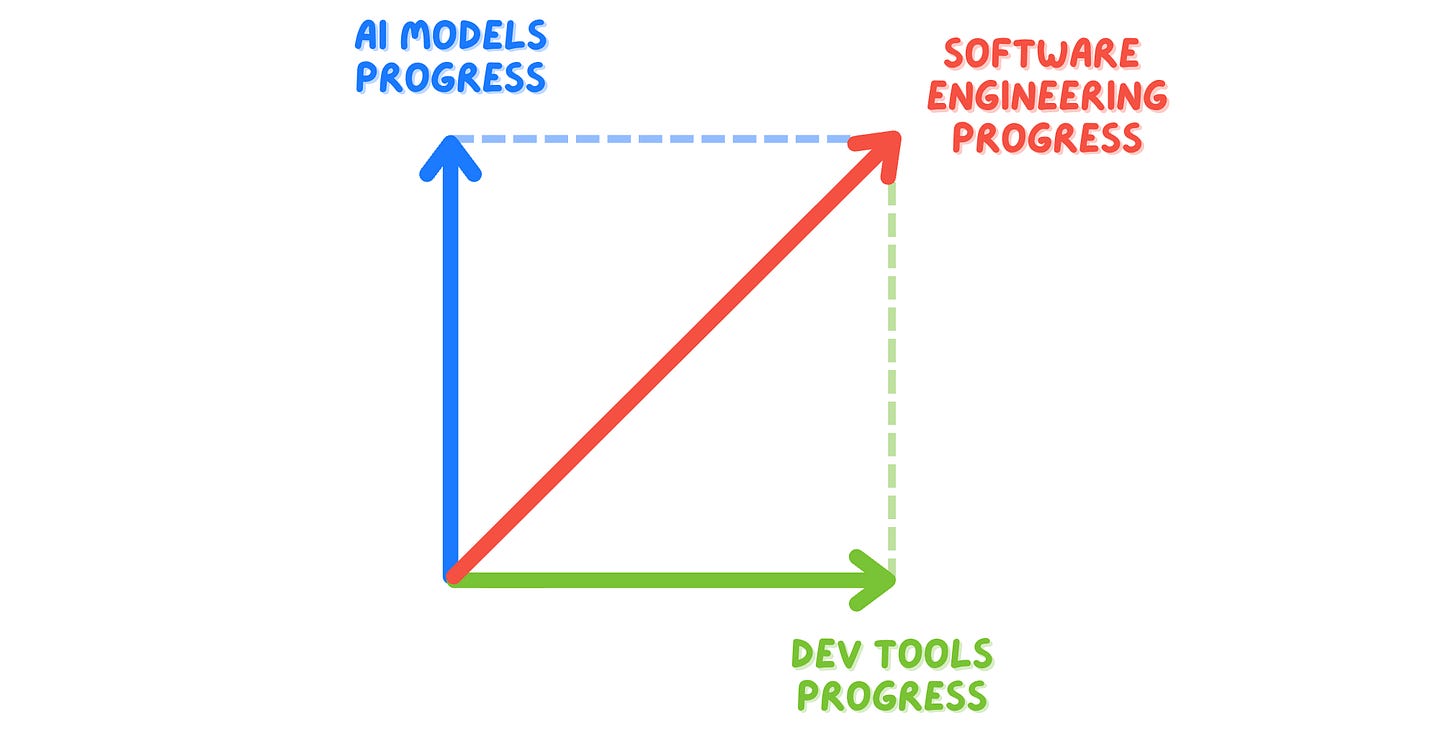

What do we mean by progress? For software engineering, AI progress is mainly driven by two vectors: frontier models and dev tools.

These two are not completely orthogonal: improvements in the former obviously drive changes in the latter, but it’s also true that we are discovering things about tool ergonomics on our own, in a way that doesn’t always feel tied to models.

In fact, over the past year we have gone through different paradigms, the three main ones today being:

AI-powered IDEs → like Cursor and Augment

Autonomous Agents → like Devin

CLI tools → like Claude Code

Boundaries are also fuzzy as each tool often tries to do more than one thing.

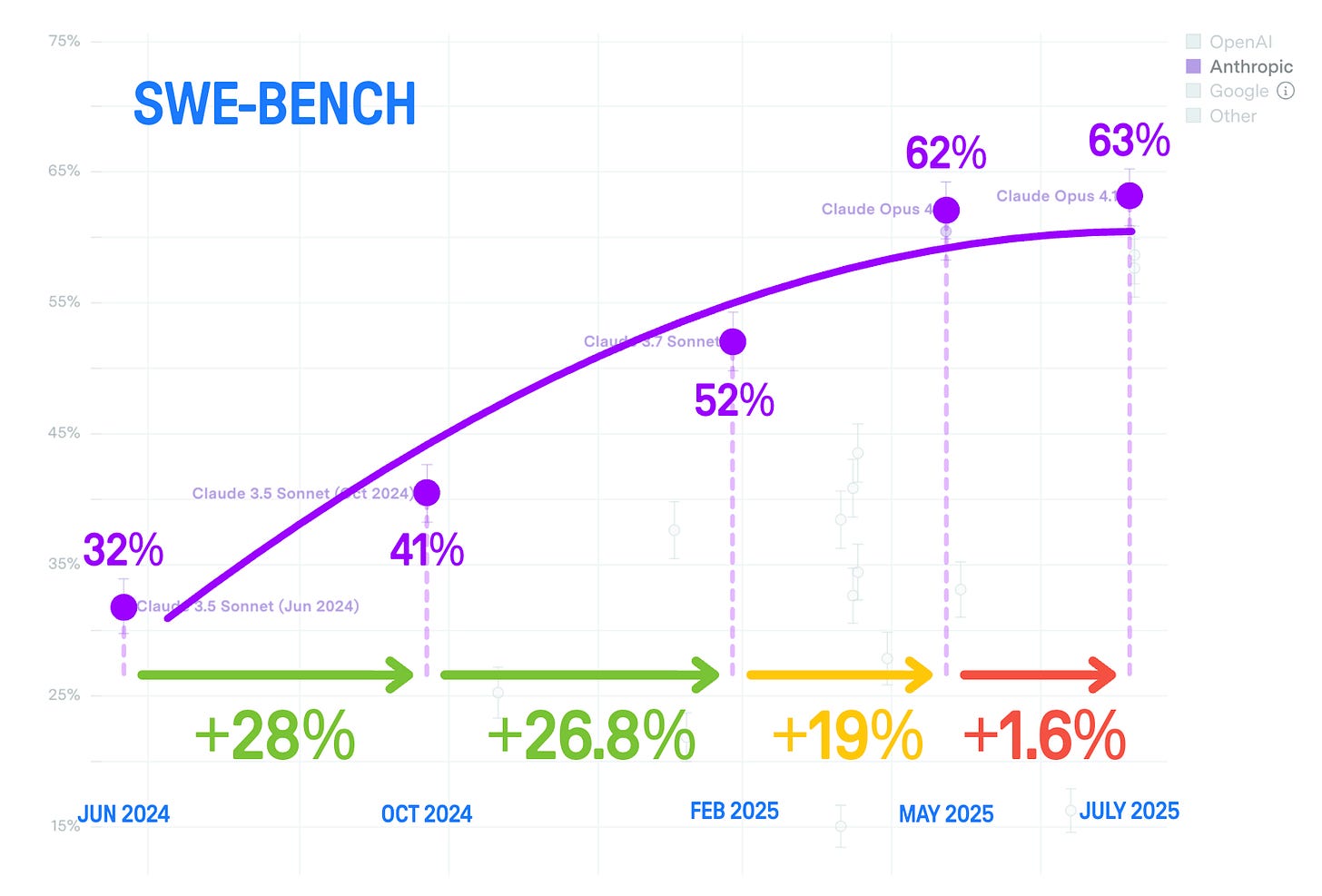

In the meantime, AI models seem to be plateauing, or at least entering the land of diminishing returns. Anthropic models, which have consistently topped SWE-bench (the most reputable coding benchmark), have been following a trend of ever smaller, shorter, and more incremental iterations. OpenAI’s latest GPT-5 follows the same trend.

This is to say: today is probably a good time to stop and take stock of where we are. It’s hard to expect AGI or a singularity moment to arrive anytime soon, so let’s look at what we have now.

Starting with data 👇

🤷 Productivity data is inconclusive

I looked at all the available data and research about the impact of AI on teams’ (and individuals’) productivity, and the truth is data doesn’t say much.

Or, better, whatever your bias is about this matter, you can find data that supports it.

Let’s start with some individual examples:

🟢 “I am 5x faster at coding because of AI” — Salvatore Sanfilippo, creator of Redis, on the Refactoring Podcast

Salvatore is one of the most successful open source authors of all time, writes incredibly complex, low-level systems code, and is also an extremely grounded and practical person. In other words, I trust him.

🟢 “90% of our code is written by AI” — Dan Shipper, founder of Every, on the Refactoring Podcast

Every does 1M+ ARR and ships working, revenue-generating software, like Spiral and Cora. Dan came on the podcast and explained how they largely vibe-code everything.

There are many individual stories like this, but when you look at more aggregated data, the outlook is less promising:

🟡 “Productivity gains from AI that we see in teams are ~5-10%” — Abi Noda, CEO of DX, on the Refactoring Podcast.

Abi sees thousands of codebases through his work at DX, and helps some of the best teams in the world.

🔴 “AI is hurting delivery performance” — The State of DevOps, DORA

The DORA team pointed to a negative AI impact: the report estimates that a 25% increase in AI adoption comes with a 1.5% drop in delivery throughput and a 7.2% drop in delivery stability (yikes!).

🔴 “AI makes developers 19% slower” — [Becker et al.] Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity.

The final nail in the coffin is this widely re-shared research from METR. Even though it looks at a very small sample (16 developers), it reports some of the worst-looking numbers on AI productivity I have ever seen.

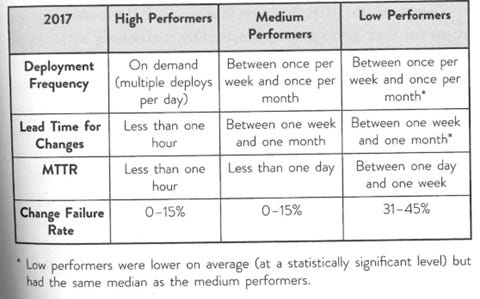

So, the data looks inconclusive, but should this really be surprising? Software delivery performance has always had a lot of variance. If we think of Accelerate, arguably the most influential work of all time on this subject, the gaps it found between elite and average teams are often staggering. We are talking 50x values on several measures 👇

As an industry, we have never found much consensus on many engineering practices, and there have always been teams able to outperform others by (several) orders of magnitude. So it feels only natural that, with AI as well, we have teams reporting wildly different outcomes.

“When the data and the anecdotes disagree, the anecdotes are usually right”

— Jeff Bezos

This also means that averaged, aggregated data will never say much, and that it’s probably more helpful to speak to individual teams and figure out how they are doing things.

And this is what I have done 👇

🤝 What’s good for humans is good for AI

In recent months I have collected stories from podcast guests, as well as from real-world teams at AI-first companies like Unblocked, Augment, Convex, CodeRabbit, CodeScene, and more.

I went in with questions like “how do you optimize this and that for AI?”, “how do you make this AI-friendly?”, and so on. And the overwhelmingly common response was: there is no magic recipe. What’s good for humans is good for AI. Which is incredibly good news.

But what does it mean to be good for humans? Let’s unpack this into the four most important mental models that I noted: