Hey, Luca here, welcome to the weekly edition of 💡 Monday Ideas 💡 from Refactoring! To access all our articles, library, and community, subscribe to the full version:


Brought to you by Unblocked! 🔳

Every year, new AI tools promise to make developers more productive. But the biggest blocker to shipping software isn’t writing code — it’s finding the context to make decisions.

🔎 Devs lose 8+ hours per week hunting through tools.

⏳ 65% of developer time is wasted when context is scattered.

🙋‍♂️ Senior engineers get pinged 10+ times a week for trivial answers.

🚀 New hires take 6+ months to become fully productive.

Unblocked turns scattered knowledge from GitHub, Slack, Jira, and Confluence into clear answers your team can trust. The result: faster onboarding, fewer interruptions, and autonomous teams that stay focused.

1) 🔨 Managers are back to coding

Last week we published our big industry report on how engineering teams are using AI — which we have been working on for more than three months!

An insight I am particularly fond of is that many engineering managers are back to coding because of AI. This shows both in the overall numbers (coding is the #1 AI use case for managers) and in individual stories.

AI is helping managers do more coding in two ways:

🧠 It reduces the cognitive load: managers often retain good taste for what good code looks like, but are a bit rusty on implementation details. AI makes the latter less relevant.

🗓️ It fits coding into packed agendas: terminal agents can run while people are in meetings or doing something else.

“Using agents to get coding done while I’m in meetings, and lowering the bar for automation.” (Engineering Manager)

“We had to perform a large-scale renaming and reorganization of files in a Ruby codebase (with no IDE support). I cursor-churned for 65 minutes, but I was free to do something else.” (CTO)

You can find the full (free!) report below!

2) 📑 Broad context vs deep context

There is a lot of talk these days about context engineering, or how to give AI everything it needs to know to perform well.

This is an area where AI is legitimately a bit different from humans, but the conclusions, as we’ll see, are similar.

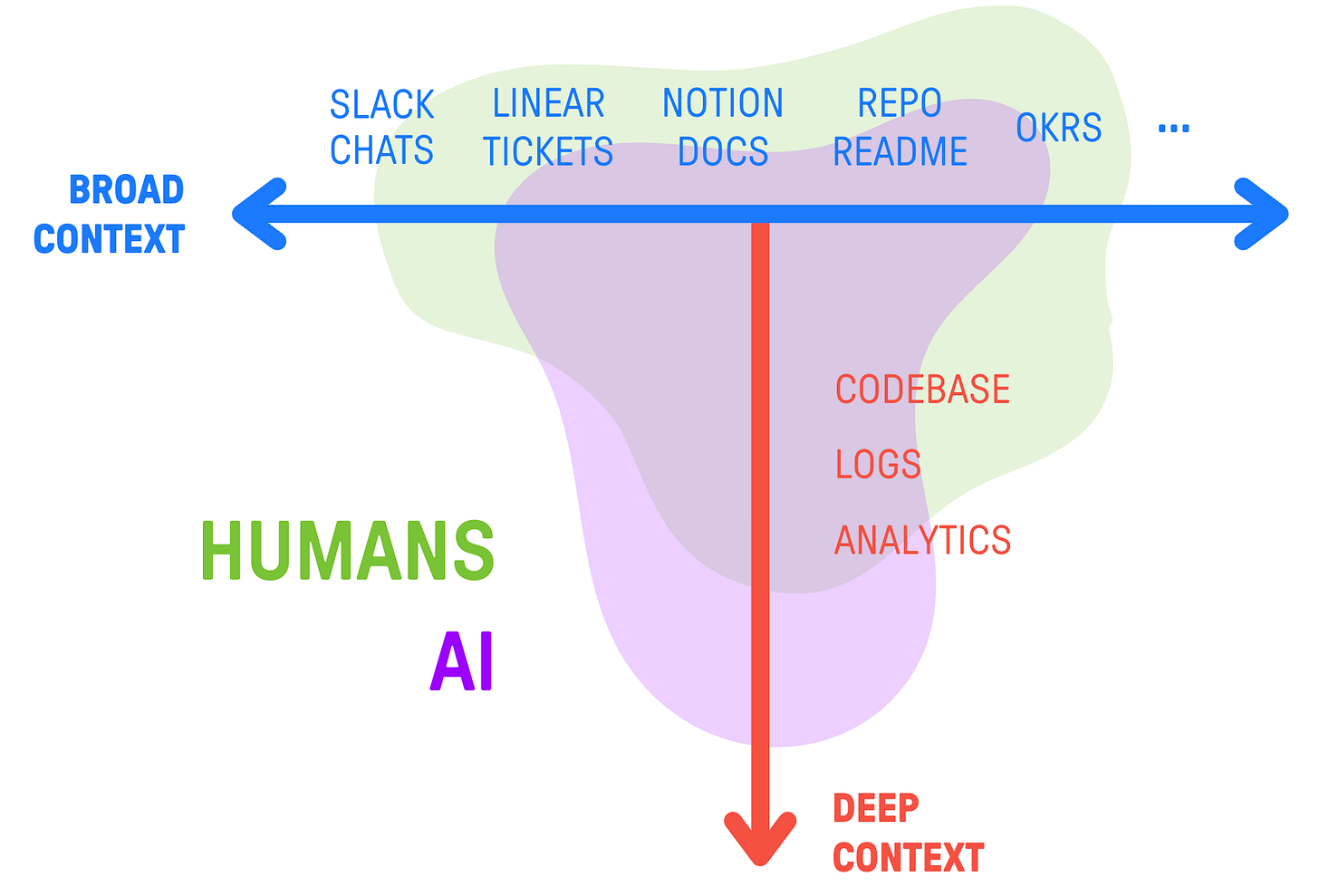

With some degree of simplification, when it comes to parsing—or putting together—info for some task, there are two types of context we work with:

↔️ Broad context — insights come from making sense of data coming from a variety of tools, like the team chat, ticketing, docs, OKRs, and more.

↕️ Deep context — insights come from the analysis of hard, data-heavy individual sources, like the codebase, logs, or analytics.

Humans are great at broad context and at keeping all these different things in their heads. AI, instead, struggles with it, while it excels at working through individual big chunks of data, far better than we can.

For sure, some of AI’s limitations with processing broad context may just be tooling limitations: MCPs are clunky, RAG feels like early tech, and some sources are usually just not plugged in. But it is what it is, and we have to work with it.

So, today AI works best when you maximize local reasoning opportunities: make things self-contained, limit coupling, and let AI find everything in one place.

This usually stems from good design choices, like good componentization, keeping docs in the repo, and the evergreen low coupling + high cohesion.

We published a full guide on how to design your tech stack for AI in this recent newsletter edition 👇

3) 🎲 Avoid “resulting” in decision making

In August I interviewed Annie Duke, one of the world’s top experts in decision making and former world-class professional poker player.

We talked about many things, and one that stuck with me was the fallacy of resulting: judging decisions by their outcomes rather than by the quality of the decision-making process itself.

Annie uses a game analogy to illustrate this:

♟️ Chess == Pure skill, no luck — Outcomes directly reflect decision quality

🧮 Backgammon == Skill + dice — Luck makes feedback loops messier

🃏 Poker == Skill + luck + hidden information — closest to real-world decision-making

The error that we often make is to treat everything like chess. We ignore the fact that there is uncertainty involved and we just assume:

Someone has a good outcome → they must have played well.

Someone has a bad outcome → they must have made bad decisions.

This happens constantly in business. The “resulting” bias creates a major impediment to learning because it prevents us from properly closing feedback loops and understanding the true reasons behind outcomes.
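To make the point concrete, here is a tiny simulation of why outcomes are a noisy signal of decision quality. It is only a sketch: the 70% and 30% win rates are hypothetical numbers picked for illustration, not figures from the interview, and the function names are made up for this example.

```python
import random


def outcome_is_good(win_probability, rng):
    """Play one round: True means the outcome was good."""
    return rng.random() < win_probability


def bad_outcome_rate(win_probability, trials=10_000, seed=42):
    """Share of rounds where the same decision still ends badly."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    bad = sum(not outcome_is_good(win_probability, rng) for _ in range(trials))
    return bad / trials


# Hypothetical odds, assumed only for this illustration.
GOOD_DECISION = 0.70  # a sound bet that wins about 70% of the time
BAD_DECISION = 0.30   # a poor bet that wins about 30% of the time
CHESS_LIKE = 1.00     # no luck involved: a good decision always wins

if __name__ == "__main__":
    print(f"Good decision, bad outcome: {bad_outcome_rate(GOOD_DECISION):.0%}")
    print(f"Bad decision, good outcome: {1 - bad_outcome_rate(BAD_DECISION):.0%}")
    print(f"Chess-like game, bad outcome: {bad_outcome_rate(CHESS_LIKE):.0%}")
```

Under these assumed odds, roughly three in ten good decisions still end badly, and roughly three in ten bad decisions still pay off. Judging each decision by its single outcome would mislabel all of those cases, which is exactly what resulting does. Only in the chess-like setting does the outcome tell you how well someone decided.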

Here is the full interview with Annie:

You can also find it on 🎧 Spotify and 📬 Substack

And that’s it for today! If you are finding this newsletter valuable, consider doing any of these:

1) 🔒 Subscribe to the full version — if you aren’t already, consider becoming a paid subscriber. 1700+ engineers and managers have joined already! Learn more about the benefits of the paid plan here.

2) 📣 Advertise with us — we are always looking for great products that we can recommend to our readers. If you are interested in reaching an audience of tech executives, decision-makers, and engineers, you may want to advertise with us 👇

If you have any comments or feedback, just respond to this email!

I wish you a great week! ☀️

Luca
