Hey, Luca here! Welcome to a weekly edition of 💡 Monday Ideas 💡 from Refactoring! To access all our articles, library, and community, subscribe to the full version:

Agent-first development for your org 🤖

This idea is brought to you by today’s sponsor, Warp!

I am a fan of Warp, which I personally use, and I have been in touch with the team for a long time. We even wrote a piece together last year.

Their latest work is Oz — the orchestration platform for cloud agents.

The problem they want to solve is this: engineers are shipping code faster than ever, but without good orchestration, individual productivity quickly hits a ceiling. Gains don’t compound, and leadership has 1) no visibility and 2) no way to measure impact.

They just released a full report that explores this, where you can learn:

Why 75% of companies fail at building their own agentic systems

How teams save hours per engineer per day by using agent automations

What actually makes it achievable for 60%+ of PRs to be agent-generated

1) 🔬 Most AI teams don’t need researchers

… they may not even need AI engineers.

In October I wrote this piece with Barr Yaron, who has interviewed (and invested in) dozens of founders of AI products.

She reports that many of the best, fastest-moving AI companies she has seen this year have zero papers, zero PhDs, and… zero guilt about it.

If you’re building applied AI products on top of foundation models from big labs, your team might look more like a modern software org: strong prompt engineering, some MLOps, and good product intuition.

Nearly every applied AI initiative she has seen started small, driven by someone without a strong AI background: a hackathon project, a curious engineer, or an exec tinkering with a model.

Once an early demo proves useful, a small team is born. As organizations mature, many evolve into a platform-plus-product model: an AI platform team builds shared capabilities (evals, inference, data pipelines) and helps product engineers ship responsibly.

You can find the full article below!

2) 📚 I am reading in public, and it’s working

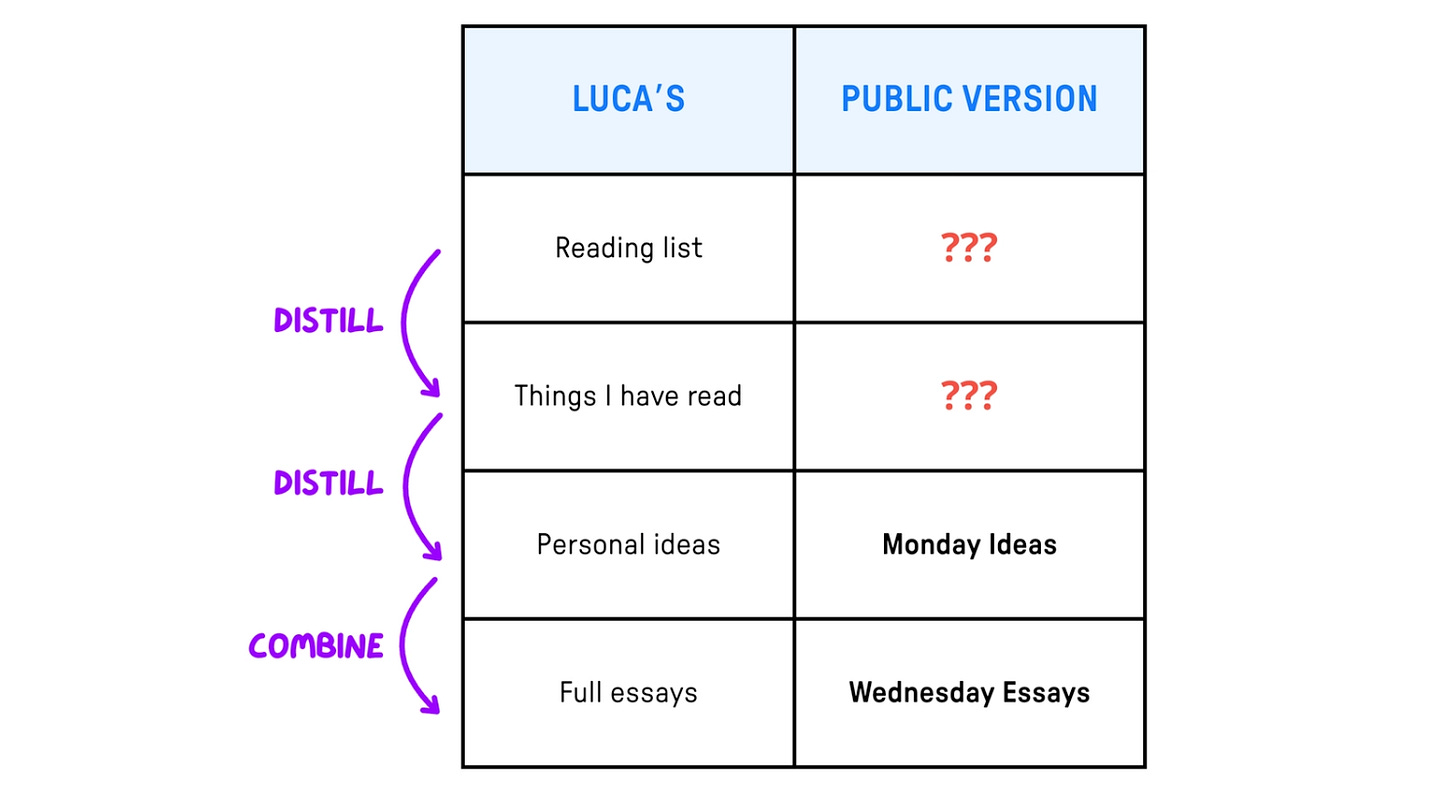

At the end of last year I reviewed my whole content creation workflow.

Looking at the various steps, I realized that the ones I struggled with the most (good reading & research) were exactly those for which I didn’t have a build-in-public version.

So I tried two things:

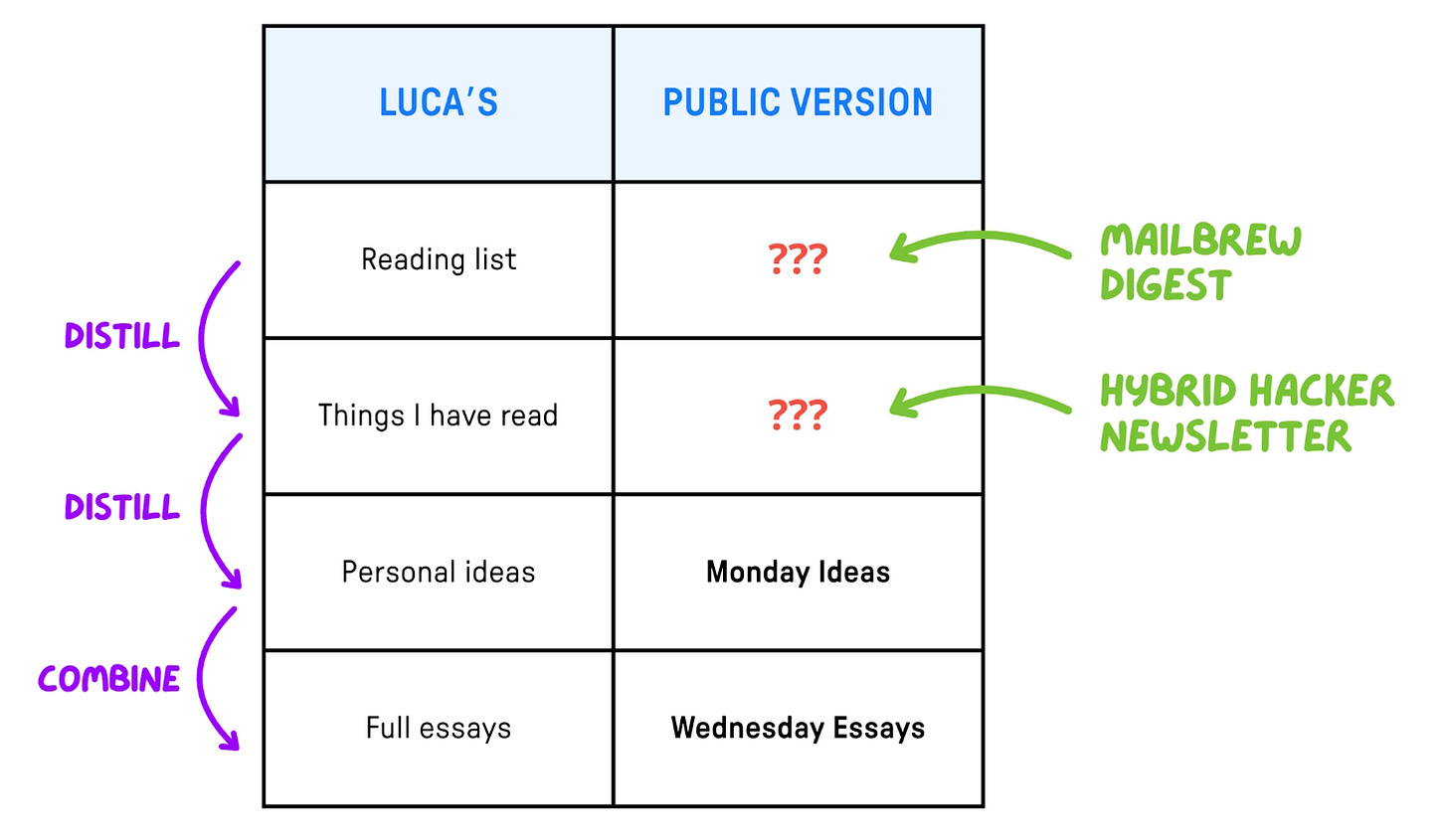

I made my reading list public and subscribable on Mailbrew — you can find it here

I wrote an experimental digest email on Hybrid Hacker, our sister newsletter, containing the best articles I had read the previous week.

Both experiments were a huge success.

I shared my reading list on LinkedIn and in the community, and as of today it has ~150 subscribers, plus I got a lot of warm comments privately. This is never going to be a business, so I am simply happy about it.

The digest emails are also getting a lot of great feedback, so I have kept writing them for the past 2 months or so.

Together, these experiments gave me incredible energy and provided the missing incentives to keep the underlying habits tidy. Now I feel a strong need to:

Keep my reading sources relevant and up to date because there are others who depend on them, and

Read more and better because I am responsible for identifying great pieces that I can recommend to readers.

The lesson I learned from this is to try to create practical incentives for the things that I want to keep doing! Full article here 👇

3) 🎙️ Driving AI adoption as a CTO

In October I interviewed Darragh Curran, CTO at Intercom, who famously went all-in on AI by:

Setting a public target of getting the team to be 2x as productive

Spinning off a completely separate AI product, Fin, instead of bolting AI onto Intercom as an afterthought.

I asked him how he drives AI adoption inside the team, and he replied with a four-step framework:

🎯 Set ambition and tone: make it clear this isn’t optional or experimental, but essential.

🚧 Remove barriers: be deliberately permissive about tool choice (Cursor, Claude, Copilot, etc.).

🤲 Get hands-on experience: personally use the tools to understand the developer experience.

⏰ Protect learning time: explicitly give engineers permission to invest time in learning new workflows.

He says the biggest challenge isn’t technical but cultural: as a manager, you need to navigate it through good conversations, on top of implementing the steps above.

Many engineers also cite lack of time as the primary barrier to adopting AI tools, creating a tension between delivery pressure and the learning required for long-term productivity gains 👇

“The number one reason folks have said that they haven’t really got up to speed with the tools is they just feel like they don’t have time. So we’ve tried to make very clear to people and their managers that this is important. You can take time.”

Here is the full interview with Darragh:

And that’s it for today! If you are finding this newsletter valuable, subscribe to the full version!

1700+ engineers and managers have joined already, and they receive our flagship weekly long-form articles about how to ship faster and work better together! Learn more about the benefits of the paid plan here.

See you next week!

Luca