Vibe-coding workflows 🪄

A top-down process for doing AI coding right, plus a full cast of supporting techniques.

Hey everyone! Our most popular article over the last few months is—maybe unsurprisingly—about how to use AI in engineering teams.

It got an awesome reception, but it was mostly a qualitative piece, giving you direction and trying to predict what comes next.

So, after that, many of you reached out via email asking for more. In particular, you asked for real-world use cases, complete with prompts and everything!

Today we are doing just that by bringing in Justin Reock, deputy CTO at DX, who analyzed data from DX customers on GenAI tool usage, use cases, and impact. Justin and his team also collected insights from interviews with S-level leaders who have successfully rolled out AI code assistants to thousands of developers.

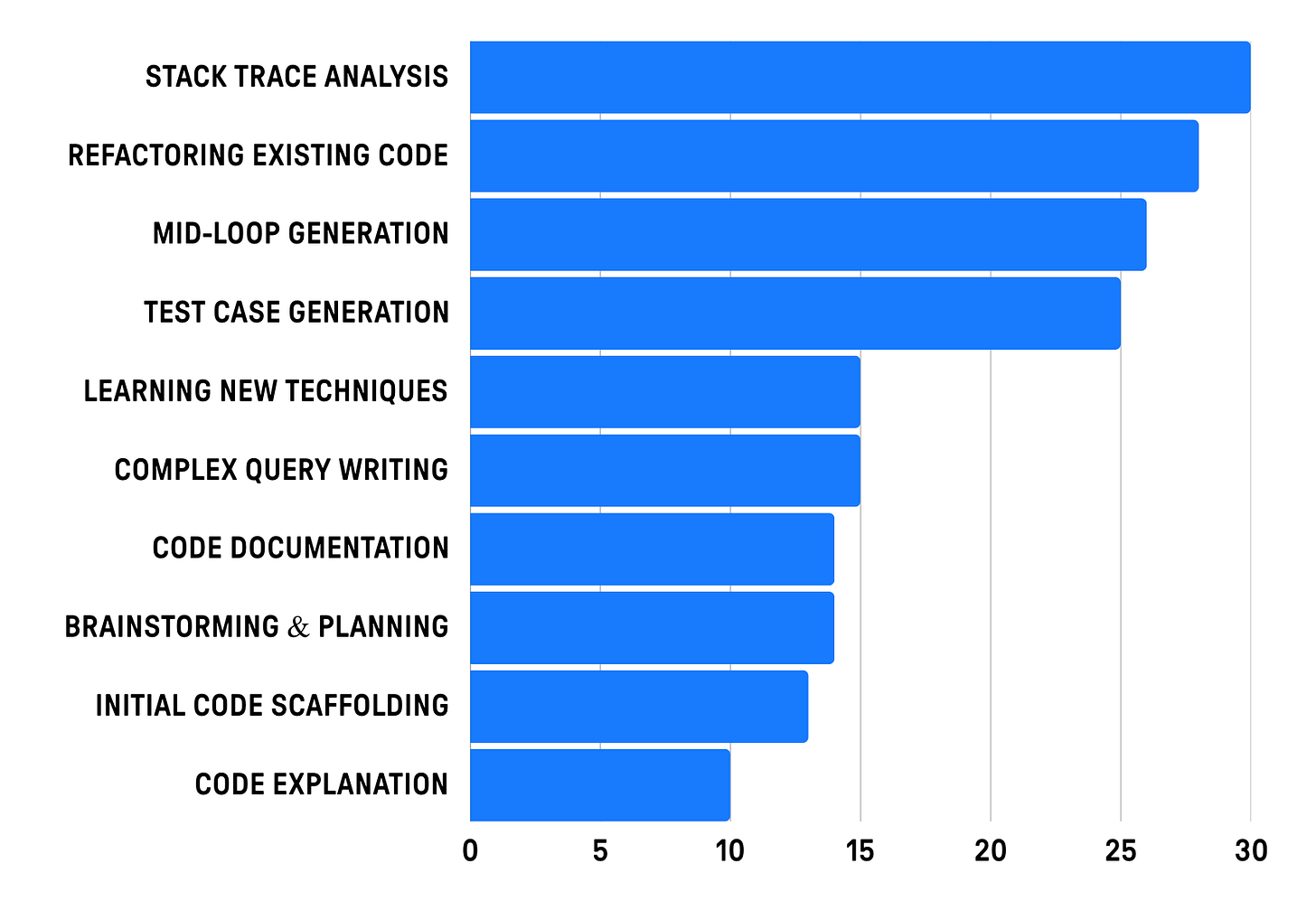

The result is a list of the ten most impactful AI use cases in engineering, based on a survey of developers who have self-reported at least an hour of time savings a week using AI assistants 👇

Today we are covering all of these techniques, with examples and prompts. We are also going beyond the list itself: we use it as a starting point to synthesize a basic workflow for successful vibe-coding.

Here is the agenda:

🗺️ Vibe-coding workflow — how to do it right by working top-down, optimizing cognitive load, and intercepting errors at the right level.

🛠️ Supporting techniques — a tool belt of ideas to leverage AI across the whole coding spectrum.

Let’s dive in!