Over the past few months there has been an explosion of AI tools for coding.

I have an ever-growing list of things to try — Lovable, Windsurf, Bolt, and more — the FOMO is real and I am not immune.

So, as models become more capable, the future of software engineering looks inescapably tied to AI. Yet, there is a growing divide that I find hard to explain:

On one side, people are building incredible things just by… vibe-coding — see Pieter Levels with his latest flight sim.

On the other side, real engineering teams I speak with are… lukewarm — they report decent but not extraordinary gains.

Why is that? I believe it’s because of two reasons:

🛣️ Green-field vs big codebases — most tools out there are optimized for green-field development. And so are most of the examples you can find. Real teams with grizzled codebases can’t do much with e.g. Lovable.

🕹️ Single-player vs multi-player — AI workflows are still largely single-player, and they kind of break when working in a team. Harper Reed also reported on it in this awesome article 👇

My main complaint about these workflows is that […] the interfaces are all single player mode.

I have spent years coding by myself, years coding as a pair, and years coding in a team. It is always better with people. These workflows are not easy to use as a team. The bots collide, the merges are horrific, the context complicated.

I really want someone to solve this problem in a way that makes coding with an LLM a multiplayer game. Not a solo hacker experience. There is so much opportunity to fix this and make it amazing.

Now, the team angle is the most interesting one to me, and that’s what we are diving into this week.

To do so, I am getting some help from Augment: I interviewed their team, collected stories from their customers, took plenty of notes, and compared them to the other stories I know of. Why Augment? Because it is purpose-built for teams and large codebases, so the stories come from real companies like Webflow, Kong, or Lemonade.

This is not going to be a piece about Augment, of course, but about figuring out which coding workflows are emerging thanks to AI, and trying to predict where the puck is going.

So here is the agenda:

💼 Many companies are hiring more engineers — especially good companies.

🏢 The #1 frontier challenge is large codebases — that’s where real value is created.

🤖 Robots vs Iron Men — the philosophical divide between AI tools.

☀️ Optimizing for developer happiness — what kind of toil can you give AI?

📊 Tracking AI code is an anti-pattern — don’t fall into temptation.

Let’s dive in! 👇

Disclaimer: I am a fan of what Augment is building, and I am excited to have them as partners on this piece. However, you will only get my unbiased opinion about all the practices and tools mentioned here, Augment included.

You can learn more about Augment below 👇

💼 Good companies are hiring more engineers

Let’s start with some market pulse. The better AI models get, the more you hear doomsday stories about engineers losing their jobs, only the best surviving, and so on.

I already debunked this idea a couple of months ago, and I stand by that take. In a nutshell: in most markets the demand for software is elastic, that is, if the price per unit of output drops, the demand grows at least proportionally.

You can visualize this in a simple thought experiment: if your team could magically produce 3x the amount of software as today, how would you use this power? Would you reduce your team, or would you keep it as is to grow faster? For companies that are profitable and/or hungry for growth, the right answer is obviously the latter.

Folks at Augment also report on companies that are hiring more engineers because of AI, experiencing the famous Jevons paradox: in some areas the demand for software is highly elastic, which means it grows more than proportionally in response to a price decrease.
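To make the elasticity argument concrete, here is a back-of-the-envelope sketch in Python. It assumes a constant-elasticity demand curve and that productivity gains are fully passed on as a lower cost per unit of software. Both are simplifications, and the function name and numbers are illustrative only.

```python
def headcount_multiplier(productivity_gain: float, elasticity: float) -> float:
    """Relative team size after a productivity gain (toy model, assumptions above).

    Cost per unit of software falls by `productivity_gain`, so demand grows by
    productivity_gain ** elasticity. Each engineer also ships `productivity_gain`
    times more, so the headcount needed scales by
    productivity_gain ** (elasticity - 1).
    """
    return productivity_gain ** (elasticity - 1)


# Hypothetical scenarios for a team that becomes 3x more productive.
for label, elasticity in [
    ("inelastic", 0.5),
    ("unit elastic", 1.0),
    ("highly elastic", 1.5),
]:
    multiplier = headcount_multiplier(3, elasticity)
    print(f"{label:>14}: team size x{multiplier:.2f}")
```

With a 3x productivity gain, this toy model gives roughly 0.58x the team for an elasticity of 0.5, 1x for 1.0, and 1.73x for 1.5: the more elastic the demand, the more engineers you end up wanting.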

So I believe the response to AI will depend on the type of org you are in:

📈 Top teams — that make the most out of engineers, where teams ship fast, often, and with little overhead, will hire more engineers because of AI productivity.

📊 Average teams — where engineers create value, but are constrained by bureaucracy and the cost of coordination, will simply stay put.

📉 Poor teams — where tech is a commodity and value is capped, will shrink in size to optimize costs.

So this is the future, but where are we today? And what do we need to make this happen?

🏢 The #1 frontier challenge is large codebases

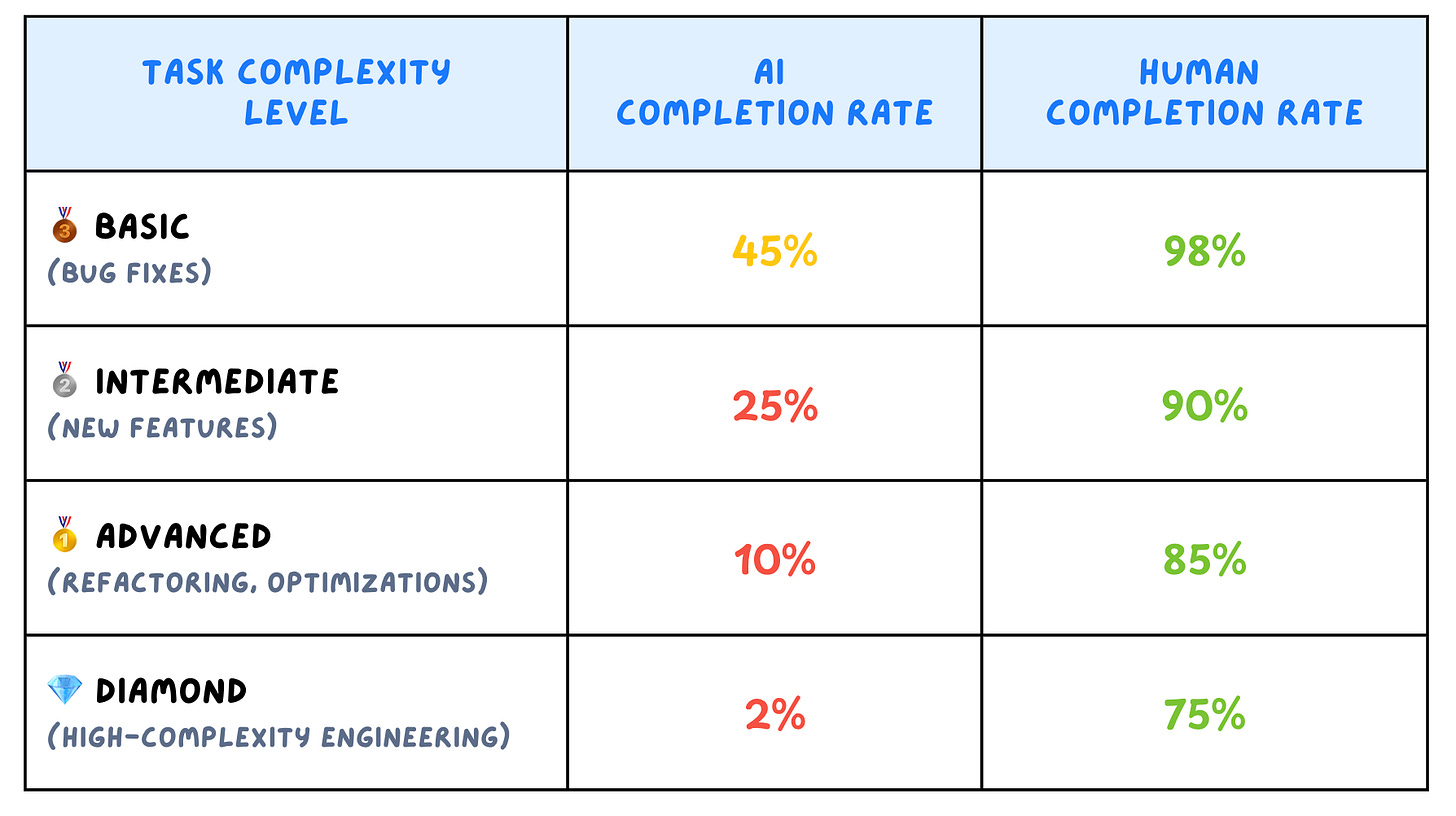

Today’s models already perform like top software engineers on purely algorithmic problems. Last October, Claude 3.5 was already able to autonomously solve 86% of a random set of LeetCode problems, across all ranges of difficulty.

However, faced with real freelancing tasks, this percentage drops to 26%. Check out this OpenAI benchmark from just three weeks ago.

This difference is all about context. As a senior software engineer from OpenAI noted:

"AI can generate code snippets well, but when it comes to fitting them into an existing project, it often fails due to a lack of context."

Also, these benchmarks are for the previous generation of LLMs. The recent releases of Claude 3.7 Sonnet and GPT-4.5 are raising the intelligence bar, making context an even more obvious bottleneck.

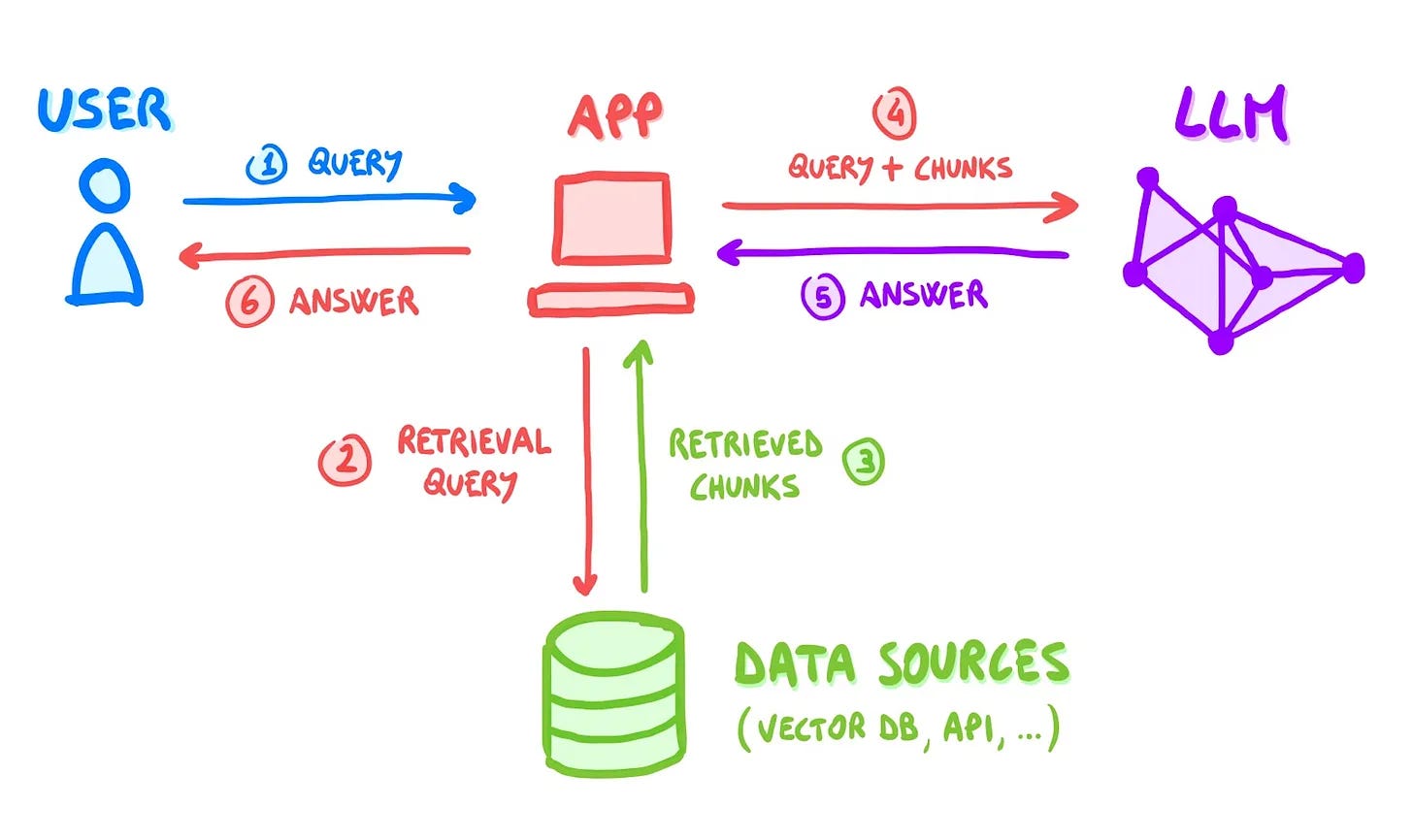

So, when it comes to coding, how is context passed to AI? There are three possible ways: fine-tuning, context window, RAG.

Out of these, only RAG is showing promise. Fine-tuning is unpredictable, slow, and extremely costly, while adding code to the context window doesn’t seem to work at scale and doesn’t guarantee precision.

So, good RAG is the best candidate to scale AI coding tools, but RAG architectures are not a commodity: we are still in the early days of learning how to build them well, for different types of content (code is completely different from e.g. literature), and different use cases. The team at Augment developed their pipeline from scratch and keeps doing an inordinate amount of work on it.
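To give a feel for what the retrieval step does, here is a deliberately naive sketch in Python. It is not Augment's pipeline: it ranks code chunks with bag-of-words cosine similarity, whereas real systems rely on learned embeddings and code-aware chunking. The file paths, snippets, and function names are made up for illustration.

```python
import math
import re
from collections import Counter

# Hypothetical, tiny "codebase": path -> chunk of code plus a short description.
CODEBASE = {
    "billing/invoice.py": "def create_invoice(customer, items): compute invoice total with tax",
    "billing/refund.py": "def refund_payment(payment_id): refund a payment to the customer card",
    "auth/session.py": "def start_session(user): issue session token after login",
}


def tokenize(text: str) -> Counter:
    """Count lowercase alphanumeric tokens; snake_case names split on '_'."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: dict[str, str], k: int = 2) -> list[str]:
    """Return the paths of the k chunks most similar to the query."""
    q = tokenize(query)
    ranked = sorted(chunks, key=lambda p: cosine(q, tokenize(chunks[p])), reverse=True)
    return ranked[:k]


def build_prompt(task: str, chunks: dict[str, str], k: int = 2) -> str:
    """Assemble a prompt: retrieved code first, then the task."""
    context = "\n\n".join(f"# {p}\n{chunks[p]}" for p in retrieve(task, chunks, k))
    return f"Relevant code:\n{context}\n\nTask: {task}"


print(build_prompt("add partial refund support for a payment", CODEBASE, k=1))
```

The point of the sketch is the shape of the flow: only the few chunks that look relevant to the task reach the model, instead of the whole repository. How well that ranking works on real code is exactly the hard part.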

Now, let’s say models are smart enough and context is somehow solved — how are human engineers going to work with AI? 👇

🤖 Robot vs Iron Man

With the current generation of tools, we are witnessing a big divide that is just as practical as it is philosophical.

It’s about autonomous tools vs augmentation tools, or, if you prefer, Robots vs Iron Men:

🤖 Autonomous tools — are tools that behave like independent agents, whose goal is to approximate a coworker. Think about Devin or Lindy.

🦸 Augmentation tools — are tools that give humans superpowers, but where ownership of work is still ultimately human. In software it’s tools like Augment or Cursor.

What does it change, on a practical level? Actually, quite a lot.

Autonomous tools own work: you may ask them for things on Slack and they submit PRs by themselves. Augmentation tools, instead, are aids to humans. You interact with them (e.g.) in your IDE, and the PR is submitted by the human author.

What is the right strategy?

This is not a black-or-white call, as I expect the two to blend over time, but there are fundamental differences nonetheless:

🔄 Feedback loop — augmentation tools have a tighter human feedback loop, because the batches of autonomous work are smaller. Until we are really in the end game of AI engineers, letting AI run autonomously and submit PRs is probably not the most effective way to work with it. You want to intercept errors earlier and iterate faster.

📈 Scaling — while augmenting humans doesn’t pose further organizational challenges, using autonomous agents does. How do you orchestrate work? Do you focus individual agents on specific tasks? Will you need to employ… manager agents to oversee a team of IC agents?

💪 Empowerment — finally, there is a very human angle: I believe in tools that empower humans, as opposed to replacing them. We are still far from this in either case, but I believe it’s important to point the ship in the right direction and provide the right vision from the start.

In short, I root for AI to help us create the Avengers, rather than the Clone Wars.

So, if the goal is to empower humans — how do you do that? And how are successful teams doing it already? 👇

☀️ Optimize for human happiness

A key insight that I learned by talking with the Augment guys is that the best teams are not maximizing developer productivity — they are maximizing developer happiness.

That is, they are using AI to reduce toil, instead of increasing it.

This is non-obvious: many workflows you can find online corner humans into mostly fixing AI’s bugs. This is never going to work, because if all that people are left to do is toil, they are going to hate their work and eventually quit.

So the question is rather the opposite: what toil can we give AI? There are some solid emerging answers here!

1) Testing 🔍

In a surprising turn of events, TDD is having a quiet renaissance, thanks to AI.

If you ask me, TDD has always been an obviously good workflow, only hard to put into practice because people 1) hate to write extensive tests, and 2) hate to think too much beforehand. So, for many developers and many teams that already have their own share of troubles, that’s too much cognitive load to ask for.

AI is changing all of this, by addressing these issues at their core:

AI is very good at writing tests, removing all the boilerplate burden from humans.

AI disproportionately rewards those who think more beforehand. Teams that are getting the best results are those that create good specs and plans and let AI implement them.

At this point, TDD becomes trivial: if 1) you need to write good specs anyway and 2) testing becomes (almost) free, you might just as well have AI create a testing plan first.

So, add that to the AGI conversation. If AI finally convinces us to write tests, it might really be smarter than us.
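The spec-first loop described above can be sketched in a few lines of Python. The `slugify` function and its expected behavior are hypothetical: the tests stand in for the spec a human writes or reviews, and the implementation stands in for the part you would hand to AI.

```python
import re


# Step 1: the spec, expressed as tests before any implementation exists.
def test_slugify() -> None:
    assert slugify("Hello World") == "hello-world"
    assert slugify("  AI & TDD: a love story ") == "ai-tdd-a-love-story"
    assert slugify("") == ""


# Step 2: the implementation, written to make the tests above pass.
def slugify(title: str) -> str:
    """Lowercase the title and join its alphanumeric words with hyphens."""
    words = re.findall(r"[a-z0-9]+", title.lower())
    return "-".join(words)


# Step 3: run the spec against the implementation.
test_slugify()
```

The tests double as the review surface: a human only has to check that the assertions match the intent, not read every line of the generated code.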

2) Documentation 📑

The second domain where AI is changing everything is documentation.

Writing docs is the ultimate toil, possibly even more despised than testing or fixing bugs. Good docs are hard to write, hard to organize, and hard to maintain.

So, just like with testing, AI is besieging this problem from all angles:

AI writes (and maintains) high-quality docs effortlessly, from inline comments to full design docs.

AI is able to fetch docs from pretty much wherever they are, reducing the need for perfect organization.

To add insult to injury, AI is able to explain code pretty well even without docs.

This final quality is dangerous, because it can make people think we don’t need docs anymore. We do, because AI can look at some code and explain what it does, but not why it does things that way, nor the context that led to our decisions about it.

So, I expect AI and humans to collaborate on docs in different ways based on the type of information: low-level docs, like code comments and tech specs, may become largely AI-generated, while high-level artifacts like engineering principles, design docs, and ADRs will stay human-driven.

3) Quality ✅

Quality, of course, is not toil, but is the result of a thousand good practices… some of which are kind of toil:

Does code match your standards / conventions?

Are there any smells / obvious improvements?

Are there any security vulnerabilities?

When integrated right, AI is good at spotting many of these, which means 1) you are raising the bar on the software quality you produce, while 2) reducing the cognitive load for your developers.

It’s still early days for tools that help with all of this, but I am already a fan of Augment itself, plus more targeted ones like Packmind and Codacy.

📊 Tracking AI code is an anti-pattern

Finally, a question that I get asked a lot: should AI-generated code be tracked and monitored, like teams do with e.g. their open source dependencies?

My answer kind of goes back to the autonomous vs augmentation divide: if AI does some things completely on its own, then of course you need to track how it fares against regular human output.

But as long as AI augments human work, then how can you really separate AI code from human code? And also, what’s the point?

To evaluate the impact of AI, my suggestion is to focus on overall productivity and developer satisfaction. Are developers happy with the tools? Do they want to keep using them? Are you able to ship faster?

📌 Bottom Line

And that’s it for today! Here are the main takeaways:

📈 Companies are hiring more engineers, not fewer — top organizations are experiencing the Jevons paradox: as AI makes development more efficient, they're expanding their teams to create even more software.

🤝 AI workflows need to become multiplayer — current AI coding tools are optimized for single developers working on greenfield projects, but real teams need collaborative AI experiences that work across shared codebases.

🏢 Large codebases remain the frontier challenge — while AI excels at algorithmic problems, it struggles with real-world tasks in existing codebases due to context limitations.

🦸 Augmentation beats autonomy — tools that empower humans create tighter feedback loops and better results than fully autonomous coding agents that try to replace engineers.

😊 Optimize for developer happiness, not just productivity — successful teams use AI to reduce toil rather than increase it, focusing on making engineers' work more fulfilling.

🧪 TDD is having a renaissance — AI excels at writing tests and rewards teams who create good specs upfront, making test-driven development more feasible and less burdensome.

📝 Documentation gets an AI makeover — AI is transforming docs by writing code comments and technical specs while humans focus on higher-level design docs and architectural decisions.

✅ Quality becomes less burdensome — AI helps enforce code standards, identifies smells, and catches security vulnerabilities, raising the quality bar while reducing developers' cognitive load.

📊 Tracking "AI code" is an anti-pattern — when AI augments human work rather than replacing it, separating "AI code" from "human code" becomes counterproductive; focus instead on overall productivity and satisfaction.

If you want to learn more about how AI can help your whole team and—especially—your whole codebase, you should definitely check out Augment 👇

See you next week!

Sincerely

Luca
