Hey! I am incredibly excited to finally release our industry report on how engineering teams are using AI.

We have been running a big newsletter survey for the past two months, and we also held 1:1s and sat with several engineering teams to see what they are doing. The result is a ton of data points, both quantitative and qualitative, which should help us understand what teams are getting right and wrong, along with the challenges and opportunities of using AI in the software development lifecycle.

We have been doing this together with the team at Augment Code, who provided plenty of support and funded part of the work. This is the largest-scale research we have ever run at Refactoring, and it was possible thanks to them!

So here is the agenda for today:

🌐 Demographics — let’s look at who replied to the survey.

🙋♀️ Personal adoption — how people are using AI for individual work.

👯 Team adoption — how companies are adopting AI at a team level: practices, process, use cases, and challenges.

🎓 Skills & Jobs — how AI is impacting the job market in terms of skills, hiring, and seniority levels.

🪴 Adoption path — finally, we’ll try to put all of this together and sketch an adoption journey, made of a few key steps, that draws on all the answers we got.

Let’s dive in!

🌐 Demographics

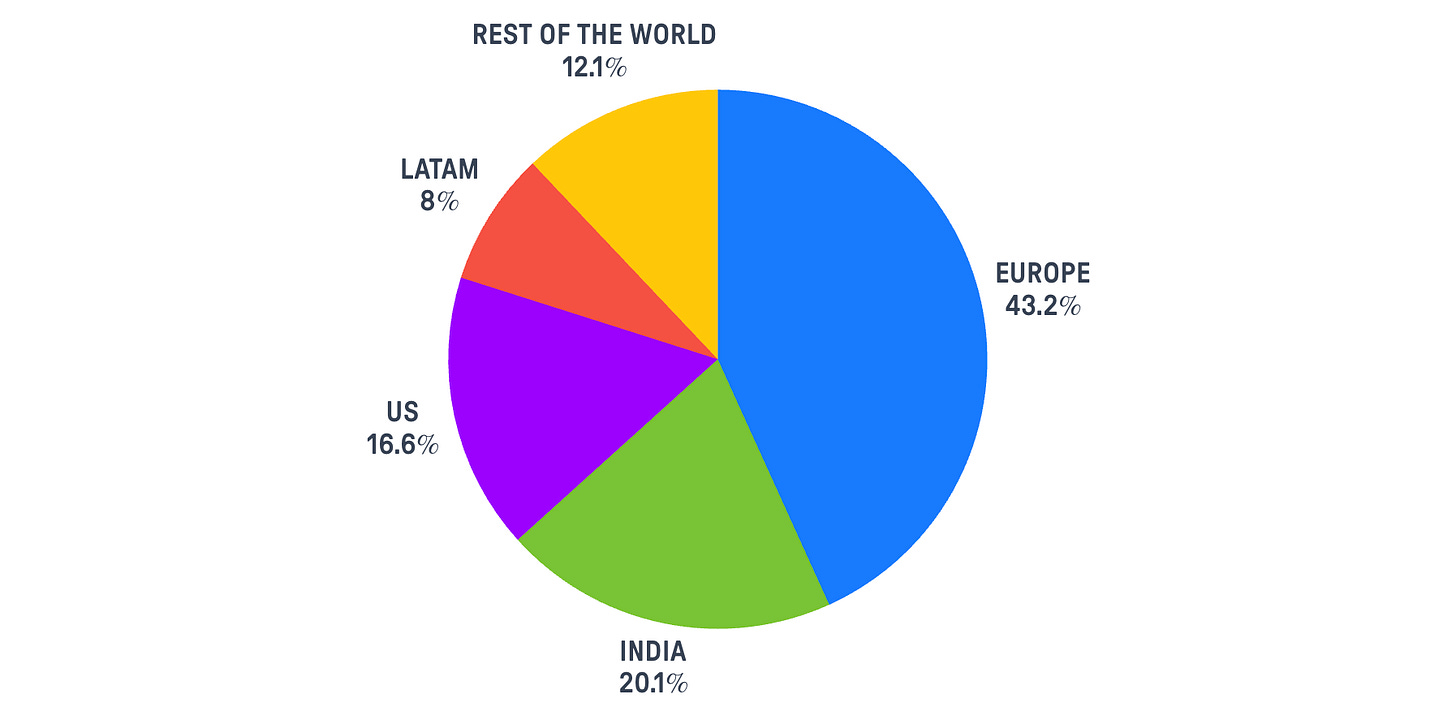

We collected answers from 435 respondents.

As we did with other surveys in the past, we intentionally went for quality over quantity, which meant the survey was substantial, with plenty of free-form questions that took a while to answer.

We took this route because AI is an extremely nuanced topic, and in many cases we didn’t want to pigeonhole answers by making people choose from predefined lists.

A lot of free-form answers also mean a lot of manual work to clean up, categorize, and collect insights from such data. We reviewed every single answer, attached tags to it, and drew correlations.

Here is more about the people who took part:

1) Geographies

Respondents come from all over the world, with the following breakdown:

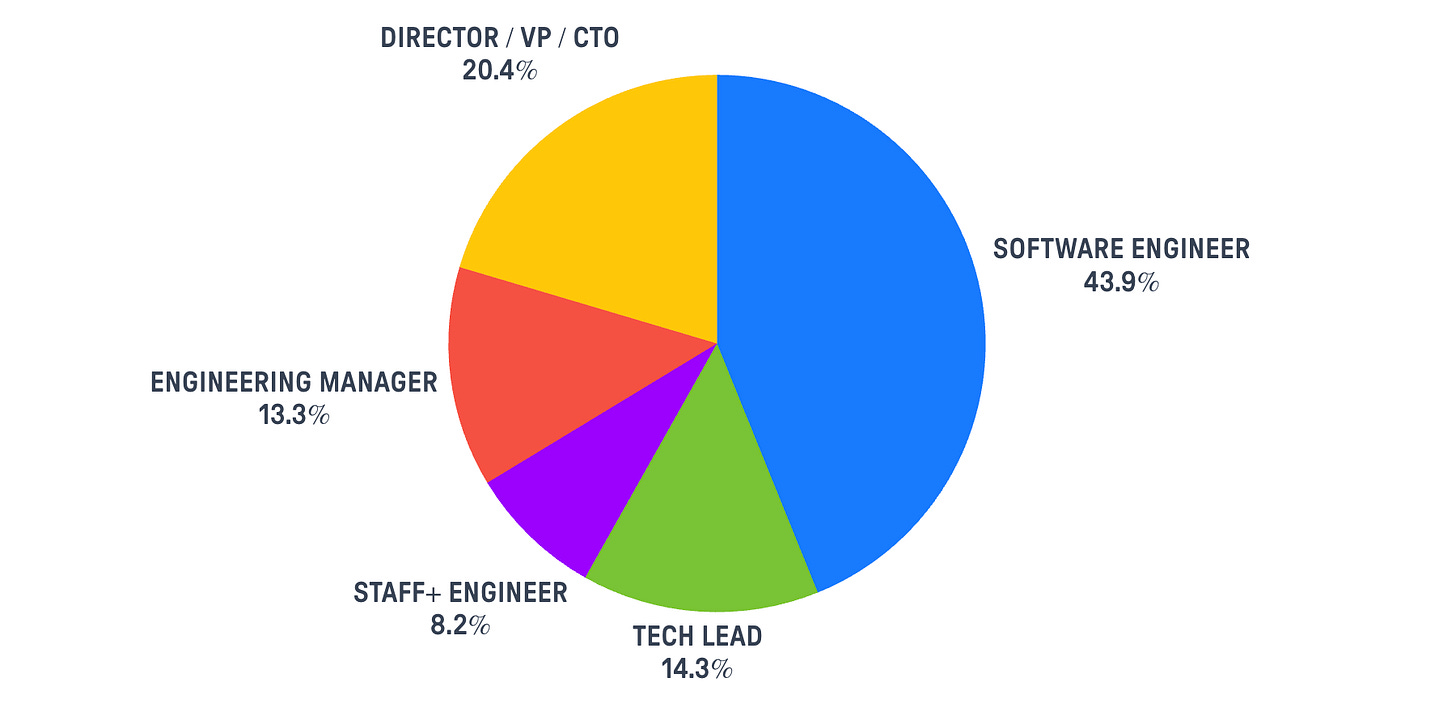

2) Roles

About 52% of the respondents are pure ICs, while 34% are managers. The remaining 14% are tech leads, who fall pretty much in the middle.

All in all, this fits the distribution we have seen in previous surveys, with ~60% ICs and ~40% managers.

🙋♀️ Personal adoption

About personal usage of AI, here are our key insights:

1) Personal usage is strong

77% of respondents use AI daily.

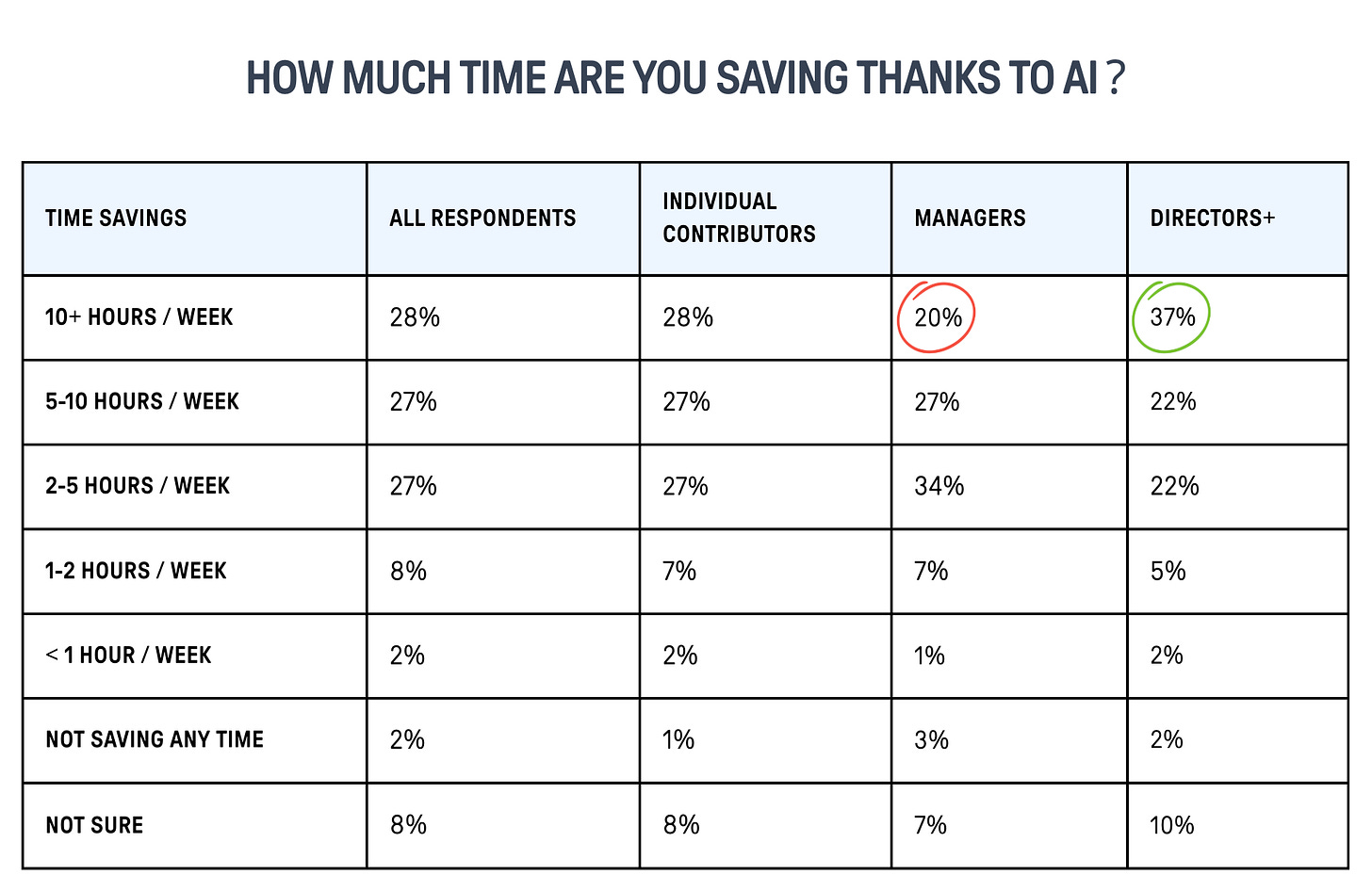

54% estimate saving 5+ hours/week with it, while 27.6% save more than 10 hours/week. Broken down by role, Director+ roles are saving the most time, followed by pure ICs.

Engineering managers are the cohort saving the least. Looking at individual answers, the feeling is that a lot of classic line management has not been meaningfully transformed by AI (yet).

Speaking of use cases 👇

2) Coding is #1

We asked people to describe the main ways AI is helping them at work. Coding is #1 across all roles, with automation of repetitive tasks a close second.

But things are a bit more nuanced than this — when you look into the coding use cases, most of them are, in fact, about automating repetitive tasks: testing, boilerplating, recurring updates, and so on.

So, AI is helping a lot with simple things, and, for many people, these simple things add up to a lot. Other simple quality-of-life improvements outside of coding include:

Doing things with voice instead of typing

Summarizing meetings and docs

Finding information faster, both online and in the workspace

Data entry on spreadsheets and docs

[AI is a] typing accelerator for simple and repetitive tasks.

— Engineering Manager

Automating repetitive transformation tasks where it would take me longer to write a script to do it for me.

— Staff Engineer

3) Managers are back to coding

Another meaningful pattern is engineering managers who are back to coding because of AI, which shows up both in overall numbers (coding is the #1 AI use case for managers too) and in individual stories.

AI is helping managers do more coding in two ways:

🧠 It reduces the cognitive load — managers often retain good taste for what good code looks like, but are a bit rusty on implementation details. AI makes the latter less relevant.

🗓️ It fits coding into packed agendas — by letting terminal agents run while they are in meetings or doing something else.

Using agents to get coding done while I’m in meetings, and lowering the bar for automation.

— Engineering Manager

We had to perform a large-scale renaming and reorganization of files in a Ruby codebase (with no IDE support). I cursor-churned for 65 minutes, but I was free to do something else.

— CTO

4) Documentation is a secret weapon

We also compared the preferred AI use cases of all respondents to those of only the people who are very satisfied with their use of AI, to understand whether these folks have figured out some secret, and the numbers are interesting.

People who are the happiest about AI use it less for high-value tasks like problem solving and research, and more for mundane tasks, especially documentation.

Helping me write the detailed specifications I have in mind via voice commands, which is quick, and also prepare internal presentations so that I can easily explain concepts to everybody with drawings.

— Director of Engineering

Draft clear and structured PRDs, meeting notes, and stakeholder updates quickly, which saves time and ensures alignment across teams.

— Tech Lead

Using AI for documentation creates a great feedback loop, because docs not only benefit humans — they benefit AI as well, which then is able to 1) understand code better, and 2) provide better answers about the codebase, which is another widespread use case.

5) Concerns

Code quality is by far the #1 concern in personal AI adoption, for all roles involved, closely followed by de-skilling.

🎽 Team adoption

If we expand the scope to the team, we see a somewhat different picture. By now, 77% of teams formally recommend using AI tools, but the adoption process is chaotic.

Management is largely happy to provide access to the tools that engineers ask for, but things usually stop there. The vast majority of respondents have no shared guidance around workflows and practices that go beyond personal usage.

Management provides access to a bunch of tools (cursor, windsurf, amp, claude) and engineers pick what works best for them.

— Engineering Manager

The lack of top-down (or even just shared) direction not only limits the benefits the team as a whole can get from AI; it also fails to engage the minority of skeptics that exist in almost every team:

[Adoption process is] a mix between top down and participated. There are some ICs really engaged, but it’s required a lot of top down to get traction. Still, many ICs don’t use even the most proven use cases.

— Director of Engineering

The main reason for this is that, simply put, we are still at a stage in which no one knows what they are doing.

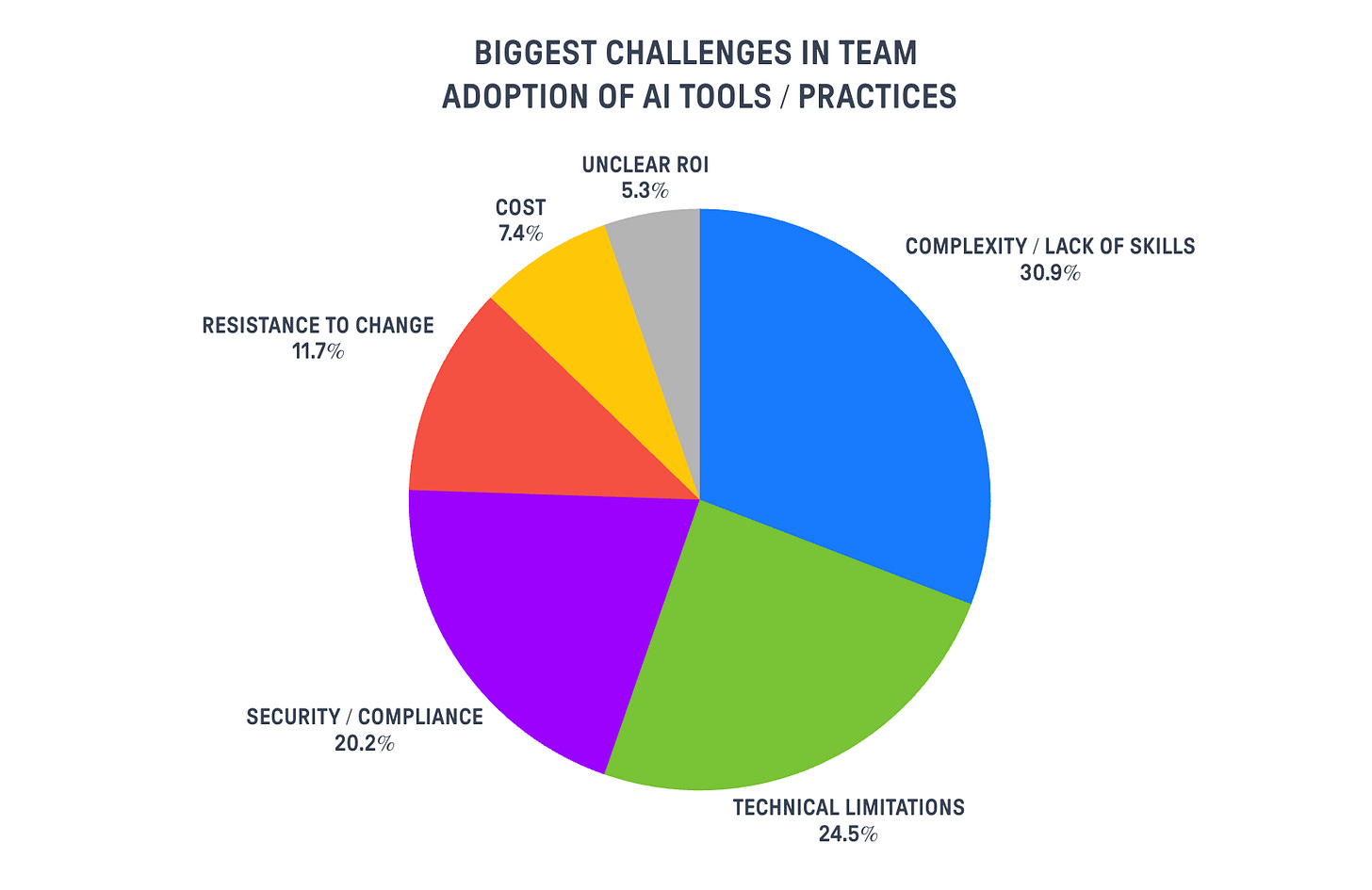

When asked for the biggest challenges in team AI adoption, the #1 factor mentioned by engineers is the lack of best practices and volatility of tools:

Most teams realize that the quality of outcomes varies vastly depending on context design and workflows, but very few are actually allocating dedicated resources to learning these skills. Most engineers are just expected to learn on the job.

Lack of understanding / training of how to best leverage tools and how to provide correct context. Lack of experience. Not enough sharing of best practices.

— Director of Engineering

The usage of AI needs to be learned on the job while adhering to deadlines.

— Software Engineer

There is also less incentive to invest in shared practices when there is the perception that these may become obsolete in just a few months (or weeks).

Time to experiment, and in general access to good information about constantly evolving best practices.

— Engineering Manager

[The main challenges are] learning curve, AI tools “fatigue” (best workflows change too often), developer workflows differ (remote team).

— Tech Lead

Technical limitations are also a big factor. Testing AI performance and double-checking output are perceived as hard, and sometimes not worth the effort.

While code generation speeds up writing code, debugging takes more effort and time.

— Director of Engineering

While it solves problems, the larger chunks of code output aren’t very readable/maintainable and are pretty obvious from code review, which increases the code review burden.

— Tech Lead

💼 Skills & Jobs

We used the last section of the survey to understand how AI is changing hiring, and what engineers think about the future.

1) Headcount is not changing a lot

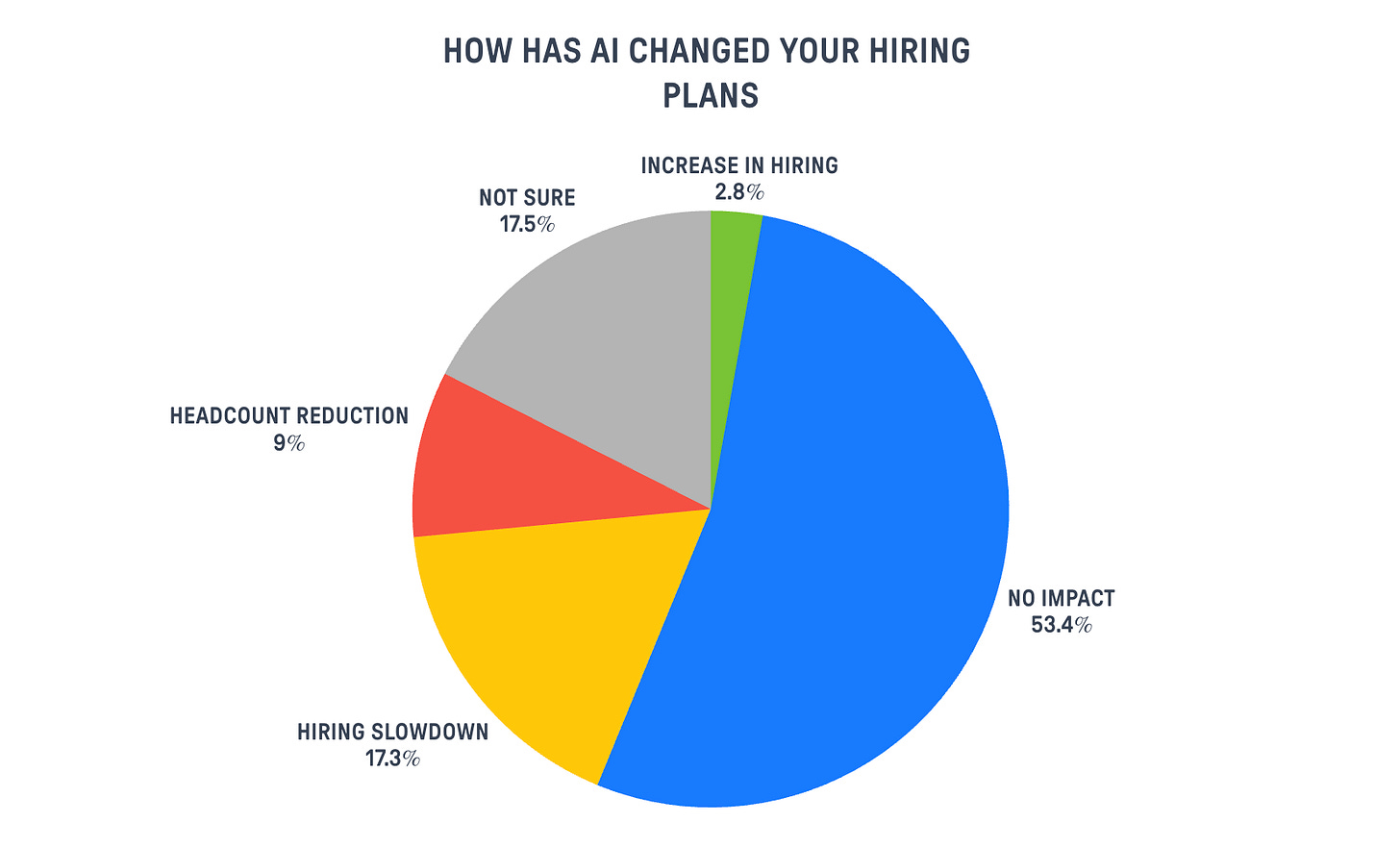

About 29% of respondents report changes to their hiring due to AI:

In most cases, it’s a hiring slowdown, with some instances of headcount reduction and very few reports of hiring increases.

In our opinion, however, these numbers are inconclusive about the impact of AI on org size and composition. Many of the slowdown stories look like a defensive reaction to uncertainty about which roles, skills, and seniority levels are now needed, rather than the result of considerations about throughput.

As for headcount reductions, it’s also hard to separate those truly driven by AI from those caused by tougher market conditions, which would have happened anyway.

2) Execs believe they will need more engineers

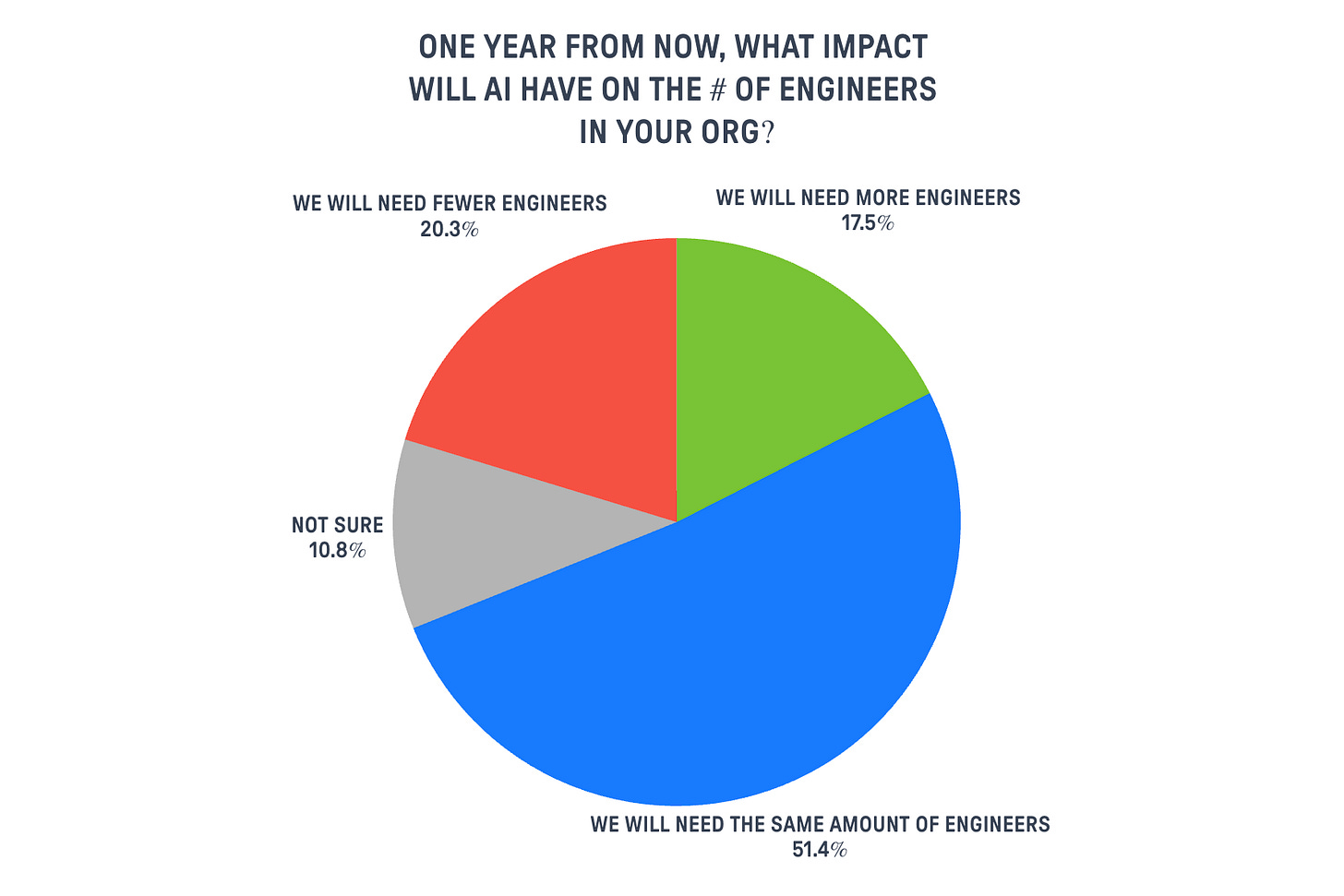

Engineers are largely skeptical that AI will permanently lead to fewer engineers:

Even more significantly, Directors, VPs and CTOs are the most skeptical about it.

Only 11% of them believe they’ll need fewer engineers because of AI in the future, while 26% believe they will need more 👇

As cost of delivery goes down, more use cases have positive ROI so demand goes up.

— Director of Engineering

We will continue to grow headcount. AI will simply increase the productivity and output of that headcount.

— Director of Engineering

3) Skills are changing

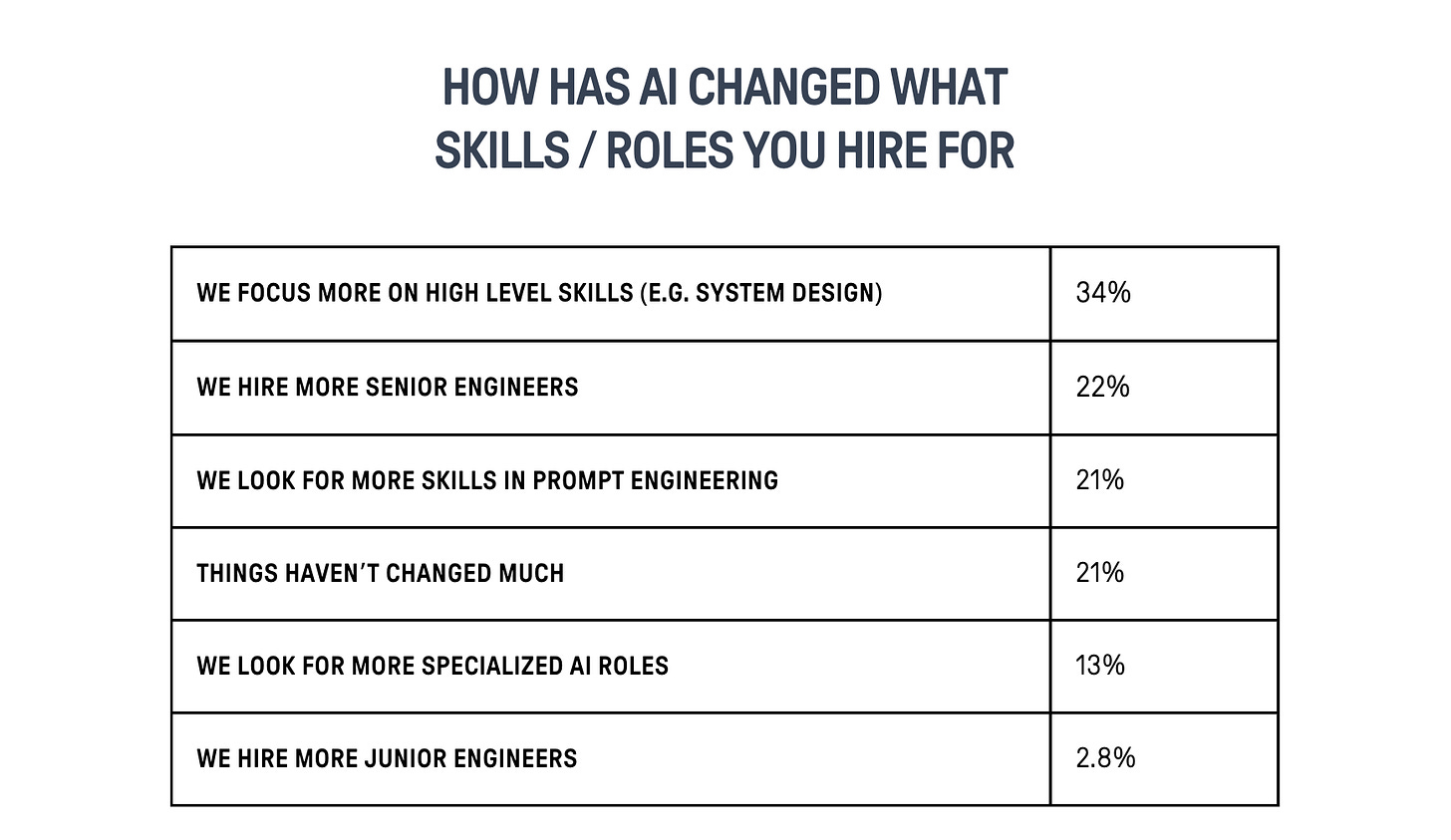

For 73.2% of respondents, AI has changed the skills they look for, with the #1 effect being a stronger focus on high-level engineering chops (e.g. system design) over expertise in specific languages and frameworks.

4) Interviews are changing

A common challenge for hiring managers is filtering out candidates who over-rely on AI or outright cheat with it. Several respondents mentioned changes in their interview process, including:

Removing take-home assignments.

Reintroducing onsites.

Removing code challenges (à la LeetCode) in favor of broader system design chats.

Our requirements haven’t changed but it is getting harder to separate the wheat from the chaff, e.g. we are no longer considering take-home assignments as one of the components of our selection process.

— Software Engineer

We have to filter harder for people who don’t over-rely on AI tools or use them to cheat on interviews.

— Engineering Manager

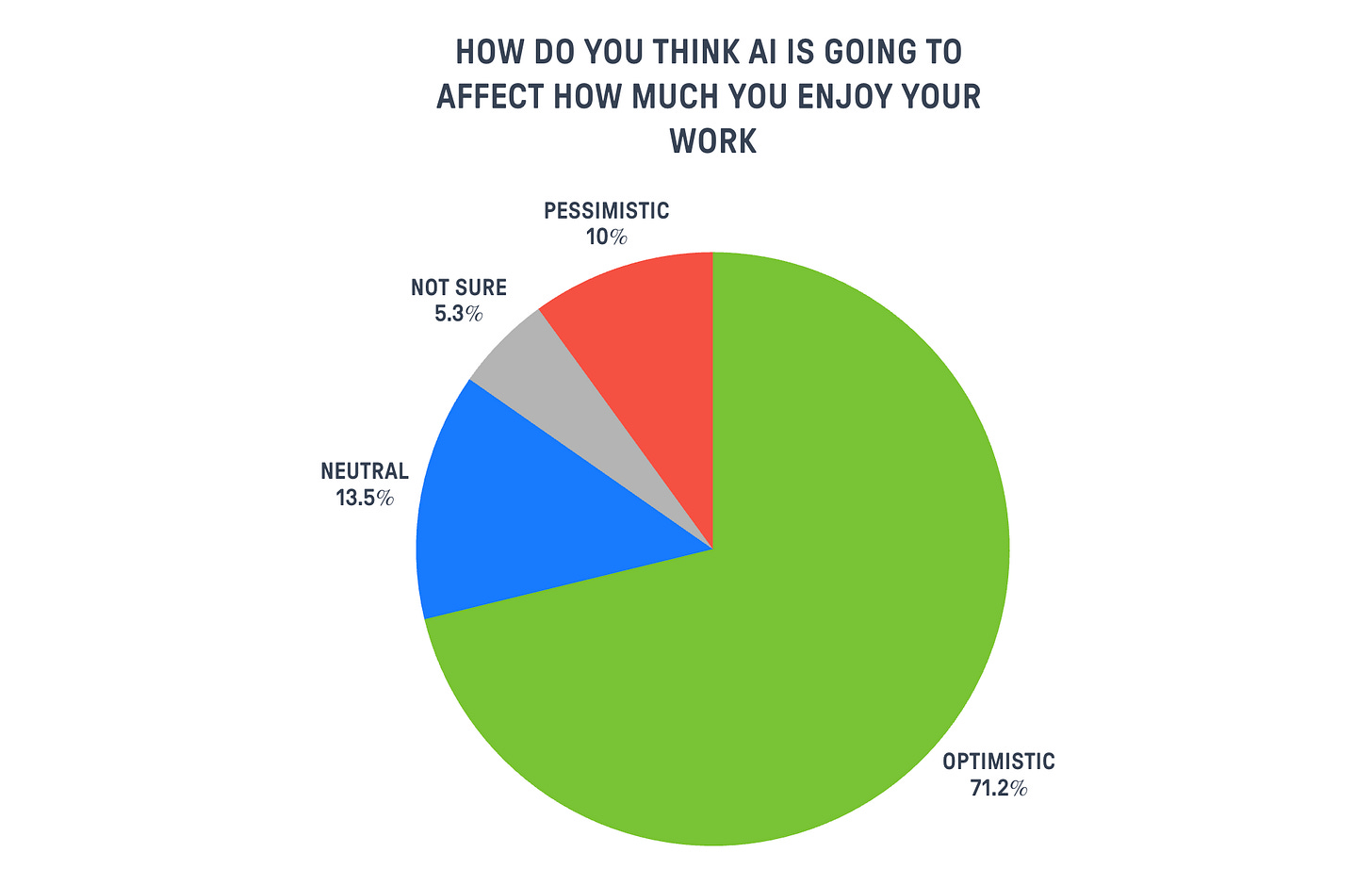

5) Engineers are optimistic

Overall, people from all roles are optimistic that AI will make their jobs better and more enjoyable.

You probably wouldn’t guess it from browsing online, but there seems to be a silent (vast) majority of engineers who truly enjoy using AI and look forward to using it more.

Here are some significant quotes 👇

The balance between operation and strategic hours in our work days will concentrate more on the strategic side, which for me is where we generate value.

— Director of Engineering

It’s taking away the toil work and leaving more space for the challenging things I can run with.

— VP of Engineering

I spend less time with repetitive and boring work, and more on study and what really matters.

— Tech Lead

I’m delighted when an agent completes a task for me without me having to lift a finger. I see AI doing the boring, simplistic tasks for me while I focus only on the tasks that require human thinking.

— Software Engineer

I sometimes feel like it’s a superpower that helps me skip the bs and focus on what interests me (building great products).

— Staff Engineer

6) Future predictions

Finally, we asked respondents to bet on future scenarios.

By combining the most popular ones, the future that people in tech expect the most is one where:

We will barely write code anymore

We’ll work in smaller teams

But the overall headcount will not shrink

As mentioned before, there is some concern among ICs about needing fewer engineers, but it drops drastically when we ask the same question of Directors, VPs, and CTOs.

Do they know better? Or is it the classic disconnect between upper management and the trenches where real work happens? It’s hard to say. Director+ folks seem to be at once more optimistic about the impact of AI and more conservative about how it will change workflows and orgs.

They are bullish about the quality of software actually improving because of AI, are not convinced AI will write most of it, and are also less concerned with the fate of senior engineering talent.

In other words, they believe software will get better, engineers are here to stay, and we will need more of them.

🪴 Adoption path

Based on everything we have seen, both numbers and individual stories, successes and failures, let’s try to write down some recommendations.

If we look at the AI adoption journey, I believe we can identify three main steps: Explore, Embrace, and Empower.

Let’s look at each of them:

1) 🌱 Explore

The first adoption step for almost all teams looks like personal exploration. Engineers become more familiar with AI tools, figure out what they are good at, and learn the ergonomics of embedding AI into daily work.

This exploration can be facilitated by management but it largely remains a bottom-up initiative that is up to individual engineers’ agency and proactivity.

So what can managers do to encourage AI usage? Here is what works best:

👏 Provide encouragement — making tools available, but without expecting performance gains.

👑 Identify champions — empower the most enthusiastic individuals to try tools and workflows and report back. Do the same with entire teams to create initial success stories.

🔄 Create knowledge sharing avenues — through ceremonies where people can demo wins and spread emerging best practices.

The goal of this first step is to create awareness and momentum through some initial wins. Here's what these look like for most teams:

Small automations that replace manual work

Small features mostly written by AI

Small refactoring / migration tasks that otherwise would never get done

More docs and tests written thanks to AI

2) 🪴 Embrace

After the initial exploration has created a basic level of proficiency across the team, it’s time to embrace adoption and graduate it into team practices that go beyond individual usage.

For most teams that got this far, these look suspiciously similar to plain good engineering practices, following the mantra that what’s good for humans is good for AI.

So, good candidates are:

Having AI do first-pass code reviews

Enforcing better testing standards

Enforcing better docs standards

Moving some docs inside repos for better context

Making good meeting summaries available to everyone

Teams that are the happiest about AI adoption consistently use it to 1) improve quality and 2) reduce cognitive load for developers, by taking on grunt work.

It’s important at this stage that things happen at team level and turn from nice-to-haves into actual standards. For example, if we all agree AI makes writing tests easier, walk the talk by enforcing the presence of tests in PRs. If some docs now live inside the repo, for both human and AI convenience, reject PRs that do not also update those docs.
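To make the idea of turning these agreements into enforced standards a bit more concrete, here is a minimal sketch of a CI-style check. It assumes a hypothetical repo layout (source under `src/`, tests under `tests/` or named `test_*.py` / `*_test.py`, in-repo docs under `docs/`); the `check_pr` function and these conventions are illustrative only, not something reported by the surveyed teams.

```python
from fnmatch import fnmatchcase
import posixpath

# Hypothetical repo conventions: adjust these to your own layout.
SOURCE_PREFIX = "src/"
TEST_PREFIX = "tests/"
DOCS_PREFIX = "docs/"
TEST_NAME_PATTERNS = ("test_*.py", "*_test.py")


def is_test(path: str) -> bool:
    """A file counts as a test if it lives under tests/ or has a test-like name."""
    name = posixpath.basename(path)
    return path.startswith(TEST_PREFIX) or any(
        fnmatchcase(name, pattern) for pattern in TEST_NAME_PATTERNS
    )


def is_source(path: str) -> bool:
    """Source files live under src/ but are not tests themselves."""
    return path.startswith(SOURCE_PREFIX) and not is_test(path)


def is_doc(path: str) -> bool:
    return path.startswith(DOCS_PREFIX)


def check_pr(changed_files: list[str]) -> list[str]:
    """Return the policy violations for a PR, given the paths it changes."""
    violations = []
    if any(is_source(f) for f in changed_files):
        if not any(is_test(f) for f in changed_files):
            violations.append("source changed but no tests added or updated")
        if not any(is_doc(f) for f in changed_files):
            violations.append("source changed but in-repo docs not updated")
    return violations
```

In CI, the list of changed paths could come from something like `git diff --name-only origin/main...HEAD`, failing the build when `check_pr` returns anything. A check this blunt will have false positives, which is exactly the kind of thing the feedback loops below should surface and tune.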

You might feel uneasy about these, but hey, if it doesn’t feel somewhat risky, is it real change? To get real gains over the long run that go beyond simple code completions, we need to put something at stake.

This is also the right time to create proper feedback loops. You won’t get everything right from the start, so it’s important to keep the pulse of how these practices are doing, what people think about them, and tweak them continuously.

There are many ways of measuring gains from AI, but the best way to begin with is just through good conversations. How does this feel? How effective are AI code reviews? Are tests helping? Hold periodic retros, prod people during 1:1s, and keep the feedback going.

3) 🌳 Empower

The final stage is what we call the empowerment stage. If AI takes on some of the busy work and reduces developers’ cognitive load, what do we do with this residual capacity?

The standard, incremental answer is just more of the same. More frontend coding if you are a frontend engineer, more design if you are a designer, and so on. Which is perfectly fine, mind you.

But the best teams are using this capacity to empower people to go beyond their normal boundaries — to expand their scope, instead of simply being more productive at the same stuff.

“[Engineers] shift focus on more business scoped things” — CTO

The way you can do this depends on your role and your team: it could be engineers becoming full-stack, PMs creating prototypes instead of simple specs, designers having a shot at frontend, and so on.

Expanding people’s scope has many upsides:

🔀 Reduced cost of coordination — fewer people need to stay in the loop to ship things, which means fewer meetings and shorter cycle times.

⚡ Higher velocity — people spend more time building, which leads them to accomplish more.

🌱 Stronger professional growth — people learn more by operating on multiple layers, and are more engaged.

📐 Easier scaling — the fewer the layers, the fewer people you need to hire to significantly impact throughput.

This is also where top-down vision is required the most. While you may rely on individual agency to plug AI tools into people’s existing work, it takes leadership to change the scope of such work, and how people think about it.

Finally, these layers are not sequential: each of these is happening in some capacity at any given time:

Individuals — explore what is possible with AI on their own.

Teams — embrace what works for individuals to augment how they work as a team.

Leaders — empower both teams and individuals into becoming the best possible version of themselves, by reshaping roles and boundaries.

AI will keep improving and software engineering will keep evolving with it, so we need to keep doing all of this all the time. Is it tiring? Yes, sometimes. But is it rewarding? You bet.

As engineers, we thrive on understanding things and turning chaos into rules and systems. That’s exactly what we need to do to get the most out of AI.

Let’s get to work!

📌 Bottom line

And that’s it for today! Here are the main takeaways from the report:

🚀 Personal adoption is strong — 77% of engineers use AI daily, with 54% saving 5+ hours per week, primarily through coding assistance and automation of repetitive tasks.

📝 Documentation is the secret weapon — Engineers who are happiest with AI use it extensively for documentation, creating a virtuous cycle where better docs help both humans and AI understand codebases better.

👥 Team adoption lags behind individual use — While 77% of teams formally recommend AI tools, most lack shared practices and workflows, with adoption remaining largely bottom-up and chaotic.

💼 Managers are coding again — AI is bringing engineering managers back to hands-on work by reducing cognitive load and fitting coding into packed schedules through async execution.

😊 Engineers are optimistic — Despite online doom and gloom, 71% of engineers are optimistic that AI will make their jobs better and more enjoyable.

📊 Headcount fears are overblown — Only 11% of Directors/VPs/CTOs believe they’ll need fewer engineers; 26% actually expect to hire more as AI increases throughput potential.

🎯 Skills are shifting upward — 73% report AI has changed hiring priorities, with increased focus on high-level skills like system design over specific language expertise.

🌱 Follow the adoption path — Successful teams progress through three stages: Explore (individual experimentation), Embrace (team-level practices), and Empower (expanding scope for individuals and entire teams).

I want to thank again the whole team at Augment Code for supporting this work!

I am a fan of what they are building. They truly focus on building an AI coding platform for real engineering teams — not vibe coders. You can check it out below 👇

I wish you a great week!

Sincerely 👋

Luca
