1) 🤖 AI dev tools are solving the wrong problem

This idea is brought to you by today’s sponsor, Unblocked!

Every year Stack Overflow releases their developer survey, and year after year the results remain the same: most developers feel that they are not as productive as they wish they could be. In the 2024 survey:

📚 Knowledge gaps — 53% of devs get blocked every day by knowledge gaps

🔍 Search for info — 63% spend more than 30 mins a day looking for info

💬 Help others — 49% lose more than 30 mins a day answering questions

This shows that the biggest challenge in software development isn’t writing code. It’s finding the context to know what code to write.

What you need is a way to find answers without having to search across a dozen tools or interrupt teammates.

2) 🔬 TDD is having a quiet AI renaissance

Is TDD having a quiet renaissance?!

Among the people I know who use AI most heavily for coding, a surprisingly high share write tests first (or have AI write them). It is not exactly how people have done TDD in the past, but we can call it TDD for 2025.

If you ask me, TDD has always been an obviously good workflow that is hard to put into practice because of its cognitive load. People 1) hate writing tests, and 2) hate thinking too much beforehand. For many devs and teams that already have their own share of troubles, that is too much to ask.

AI is changing all of this, by attacking from both angles:

AI is very good at writing tests, which takes the boilerplate load off humans.

AI disproportionately rewards those who think more beforehand. Teams that are getting the best results are those that create good specs and plans and let AI implement them.

At this point, TDD becomes trivial: if 1) you need to write good specs anyway and 2) testing becomes (almost) free, you might just as well have AI create a testing plan first.
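As a minimal sketch of what this tests-first flow can look like, here is a spec written as tests, followed by an implementation that satisfies it. The `slugify` function and its rules are hypothetical and chosen only for illustration. The point is the order: the tests exist before the code that makes them pass.

```python
import re
import unittest


# Step 1: the spec, written as tests before any implementation exists.
# `slugify` and its rules are hypothetical, used only to illustrate the flow.
class SlugifySpec(unittest.TestCase):
    def test_lowercases_and_joins_words_with_hyphens(self):
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_drops_punctuation(self):
        self.assertEqual(slugify("TDD, for 2025!"), "tdd-for-2025")

    def test_empty_input_gives_empty_slug(self):
        self.assertEqual(slugify(""), "")


# Step 2: the implementation, written (by you or by AI) to make the spec pass.
def slugify(text: str) -> str:
    # Keep only runs of letters and digits, then join them with hyphens.
    words = re.findall(r"[a-z0-9]+", text.lower())
    return "-".join(words)


def run_spec() -> bool:
    """Run the spec and report whether every test passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(SlugifySpec)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()
```

Calling `run_spec()` before `slugify` is implemented would fail every test, which is the signal that the spec came first.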

So, add that to the AGI conversation. If AI finally convinces us to write tests, it might really be smarter than us.

We did more real-world reporting on AI engineering usage in this recent article 👇

3) 🥇 Performance reviews shouldn’t be surprising

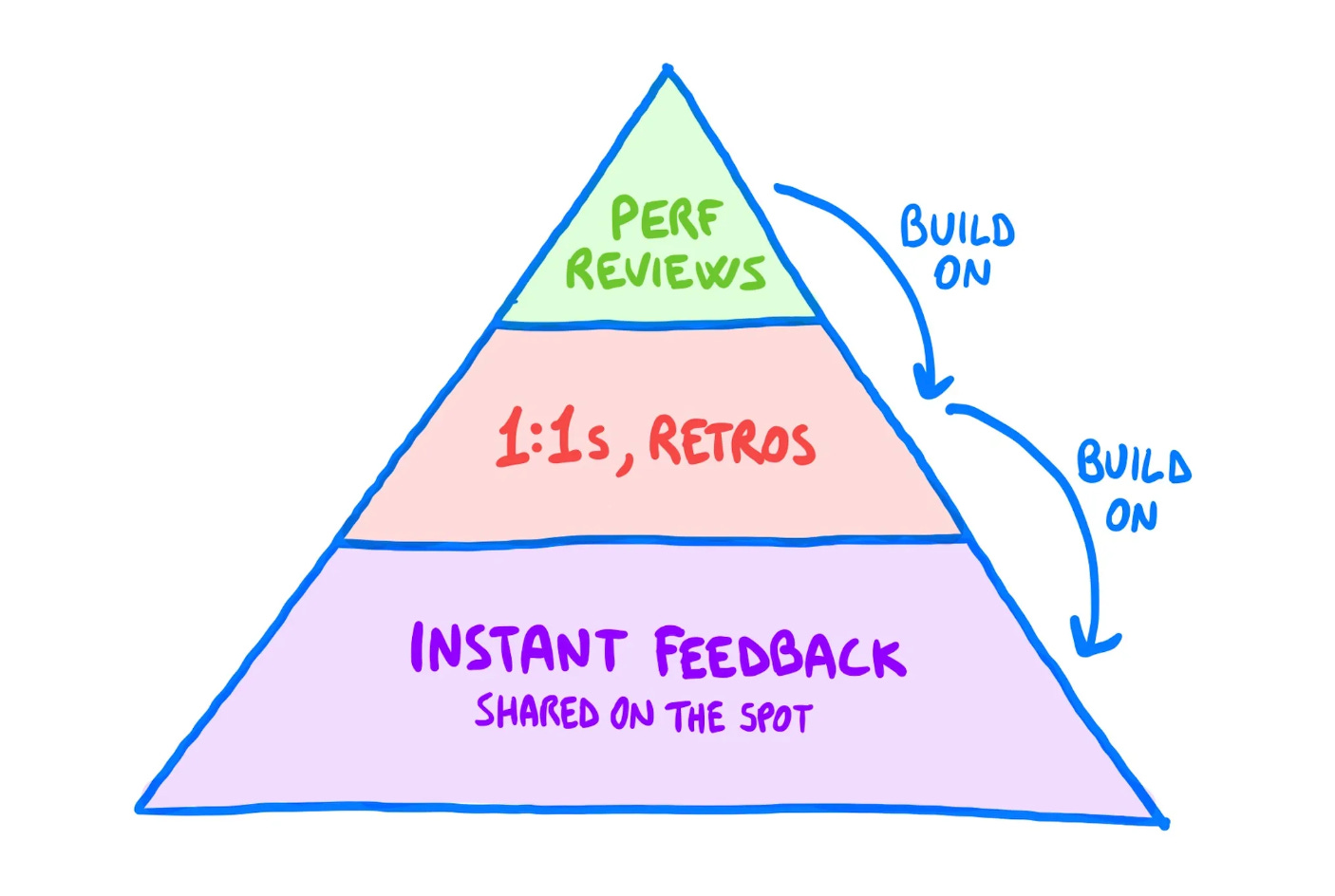

I believe performance reviews are mostly useful as a prime moment to act on feedback — by setting goals and priorities — rather than sharing it.

In fact, in healthy relationships, feedback is frequent and mainly shared 1) on the spot, and 2) in recurring venues like 1:1s. So, feedback in performance reviews should mostly ratify what has already been discussed privately.

As a rule of thumb, if people are surprised by your review, you did something wrong. We wrote a thorough guide on performance reviews here 👇

4) 🩺 Diagnostic vs improvement metrics

Although I have written about this in countless articles, one of the questions I still receive most often is: how do I actually use engineering metrics?

And my answer is invariably: it depends. It depends on many things — especially on what metrics you are using.

Metrics have characteristics that make them useful in specific contexts. Some help us see trends, while others can drive daily decisions.

So, one of the most important differences is the one between diagnostic and improvement metrics:

🩺 Diagnostic Metrics — are high-level, summary metrics that provide insights into trends over time. They are collected with lower frequency, benefit from industry benchmarks to contextualize performance, and are best used for directional or strategic decision making. Examples: DX Core 4, DORA.

🔧 Improvement Metrics – drive behavior change. They are collected with higher frequency, are focused on smaller variables, and are often in teams’ locus of control.

For example, you may go to the doctor once a year and get a blood panel to look at your cholesterol, glucose, or iron levels. This is a diagnostic metric: it is meant to give you a high-level overview of your overall health, and to be an input into other systems (like changing your diet to include more iron-rich foods).

From this diagnostic, more granular improvement metrics can be defined. Some people wear a Continuous Glucose Monitor to keep an eye on their blood glucose after their test indicated that they should work on improving their metabolic health. This real-time data helps them make fine-tuned decisions each day. Then, we expect to see the sum of this effort reflected in the next diagnostic measurement.

For engineering orgs, a diagnostic measurement like PR Throughput can show an overall picture of velocity and, through benchmarks, put your performance in context.

Orgs that want to drive velocity then need to identify improvement metrics that support this goal, such as time to first PR review.

For example, they could get a ping in their team Slack to let them know when a new PR is awaiting review, or when a PR has crossed a threshold of time without approval. These metrics are more granular and targeted, and allow the team to make in-the-moment decisions to drive improvement.
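A rough sketch of how such a check could work is shown below. The `PullRequest` record, the 24-hour threshold, and the message wording are all assumptions made for illustration, and this version tracks the first review rather than approval. A real setup would pull PR data from your Git host and send the message through your chat tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class PullRequest:
    # Hypothetical record; real data would come from your Git host's API.
    number: int
    opened_at: datetime
    first_review_at: Optional[datetime] = None


def time_to_first_review(pr: PullRequest) -> Optional[timedelta]:
    """Improvement metric: how long the PR waited for its first review."""
    if pr.first_review_at is None:
        return None  # Not reviewed yet.
    return pr.first_review_at - pr.opened_at


def stale_prs(
    prs: List[PullRequest],
    now: datetime,
    threshold: timedelta = timedelta(hours=24),  # Assumed value; tune per team.
) -> List[PullRequest]:
    """Return PRs that are still unreviewed after waiting longer than `threshold`."""
    return [
        pr for pr in prs
        if pr.first_review_at is None and now - pr.opened_at > threshold
    ]


def reminder(pr: PullRequest, now: datetime) -> str:
    """Build the text a team could post to its chat channel."""
    hours = int((now - pr.opened_at).total_seconds() // 3600)
    return f"PR #{pr.number} has waited {hours}h for a first review"
```

Running `stale_prs` on a schedule and posting each `reminder` gives the team the in-the-moment signal, while the average of `time_to_first_review` over a period is the number you would watch for improvement.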

We talked about this at length, plus how to get from metrics to actionable improvements, in this recent piece written together with Laura Tacho 👇

If you have any comments or feedback, just respond to this email!

I wish you a great week! ☀️

Luca

I think TDD, or at least testing in general, is having a big resurgence thanks to AI.

I’ve been thinking about this myself for our apps. If we can get to higher coverage, we can review AI pull requests a little faster and more safely, knowing everything passes.