How to make your tech stack AI-friendly 📏

A deep dive into strategies and mental models with Jamie Turner, co-founder of Convex.

The dominant conversation in software engineering today is obviously about AI.

One reason it is so widely discussed, beyond the tech itself, is that figuring out the value, or even just the potential, of AI is incredibly hard right now.

In fact, while a lack of consensus on what to expect is normal for any new technology, the magnitude of the variance for AI is just… dumbfounding.

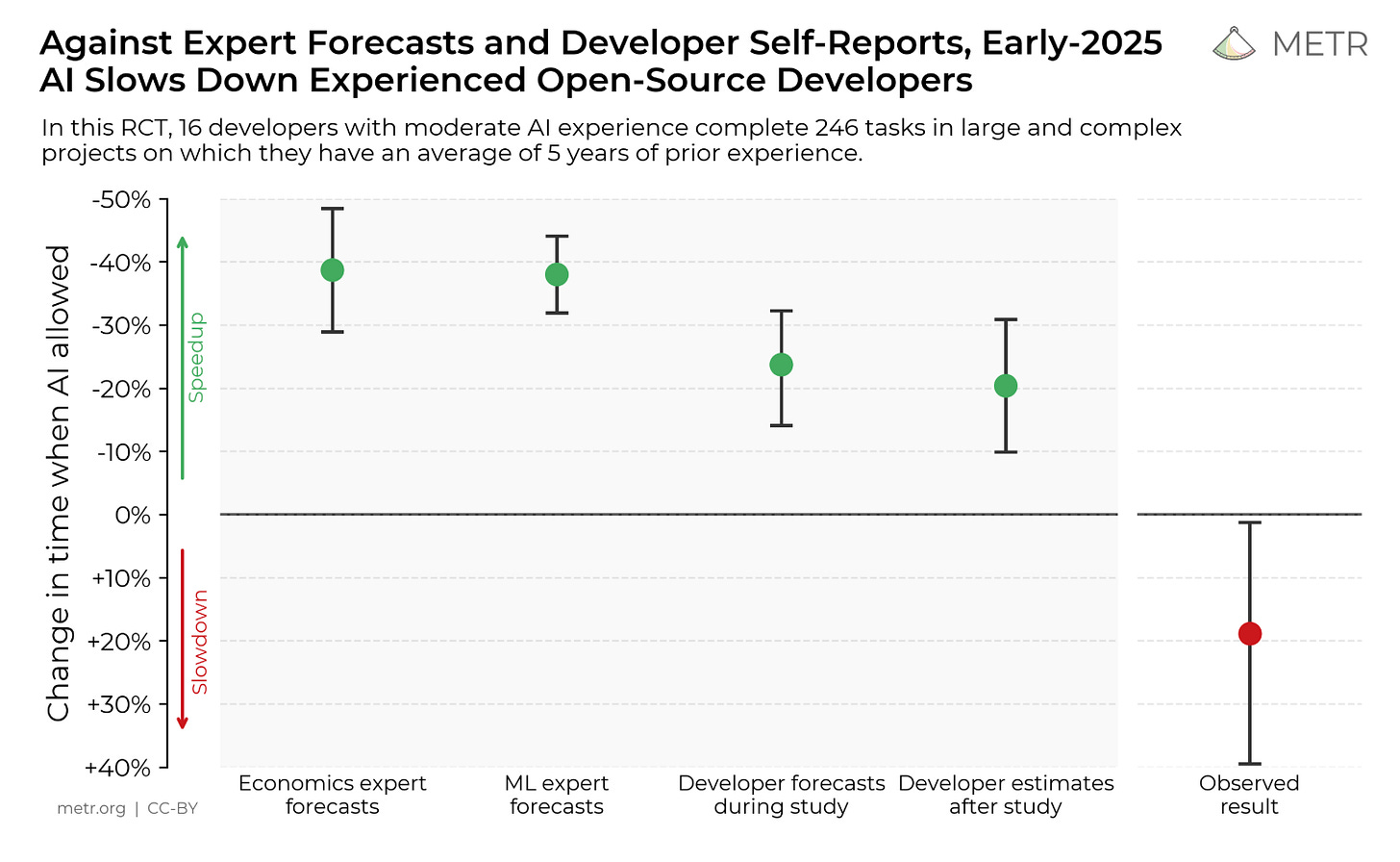

Just a couple of weeks ago, new research from METR dropped and challenged the very assumption that developers were getting any help from AI. They found pretty much the opposite: AI tools slowed down experienced open-source developers working on realistic tasks in their own repositories.

This is not the only research that casts doubt on AI's impact: DORA reached a similar conclusion in its latest State of DevOps report.

But of course I can point to plenty of counterexamples.

To name one: when I interviewed Salvatore Sanfilippo, aka Antirez, the creator of Redis, he reported being 5x faster thanks to AI. Salvatore is extremely methodical and far from an enthusiast by default, so his account deserves to be taken seriously. He is also the prototype of a 10x developer: he writes some of the world’s most complex systems code, largely in C. This is exactly the kind of work where you wouldn’t expect AI to make a dent, and yet it does.

We could be skeptical of either Salvatore’s or METR’s findings, but the gulf between being slowed down and being 5x faster is too wide to ignore, and it deserves some reflection.

It seems obvious to me that, right now, some teams and individuals get a lot more out of AI than others, and we should understand why. Much of this conversation focuses on workflows: prompting strategies, using this or that tool, connecting to MCP servers, and so on. But there is an angle I rarely see discussed: tech choices.

How does AI productivity depend on your tech stack? Should you take AI into account when you pick a language or a framework? How? What makes some tech a good fit for AI? And, in turn, how should tech be designed to be such a good fit?

To me, these questions are incredibly interesting because they affect not only our choices today but also how tech will be designed in the future.

So, is there any difference between how tech stacks should be designed for humans and how they should be designed for AI?

To explore this, I had a long chat last month with Jamie Turner, co-founder of Convex.

Convex is one of the very few companies today ambitious enough to rethink the entire tech stack from the ground up, starting with the database. They designed a new open-source database from scratch, built an entire backend framework around it, and went all the way up to a full vibe-coding platform, Chef.

They bring an enviable track record to the task: Jamie and his co-founder James were, respectively, Senior Director and Principal Engineer at Dropbox, where they built from scratch one of the most daunting storage systems in the history of the Internet.

So I sat down with Jamie to explore tech design principles that amplify AI’s strengths while mitigating its weaknesses.

Here's the agenda:

🧠 AI succeeds where humans succeed — why the best AI-optimized systems double down on good design principles.

🔧 Creating constraints — how static typing, conventions, and guardrails make AI more effective.

💻 Everything as code — why configuration-as-code and language unity reduce AI confusion.

🎯 Limiting context — how component architecture and unified platforms help AI focus.

🚀 Designing for AI adoption — building evals, feedback loops, and constraints that scale with AI.

Let's dive in!