Hey, Luca here! Welcome to the Monday Ideas 💡

Every Monday I will send you an email like this with 3 short ideas about making great software, working with humans, and personal growth.

You will also receive a long-form, original article on Thursday, like the last one:

To receive all the full articles and support Refactoring, consider subscribing if you haven’t already!

p.s. you can learn more about the benefits of the paid plan here.

🐺 QA Wolf

This week I am happy to promote QA Wolf, which has developed a unique, cost-effective approach to testing that gets you to 80% automated end-to-end test coverage in just 4 months — and keeps you there.

How cost-effective? Their latest case study shows how they've helped GUIDEcx save $642k+ / year in QA, engineering, and support costs.

Their secret is a combination of in-house QA experts building your test suite in open-source Microsoft Playwright, unlimited test runs on their 100% parallel testing infrastructure, and 24-hour test maintenance.

Schedule a demo and see for yourself 👇

(p.s. and ask about their 90-day pilot!)

1) 🥔 Hot potato handoff

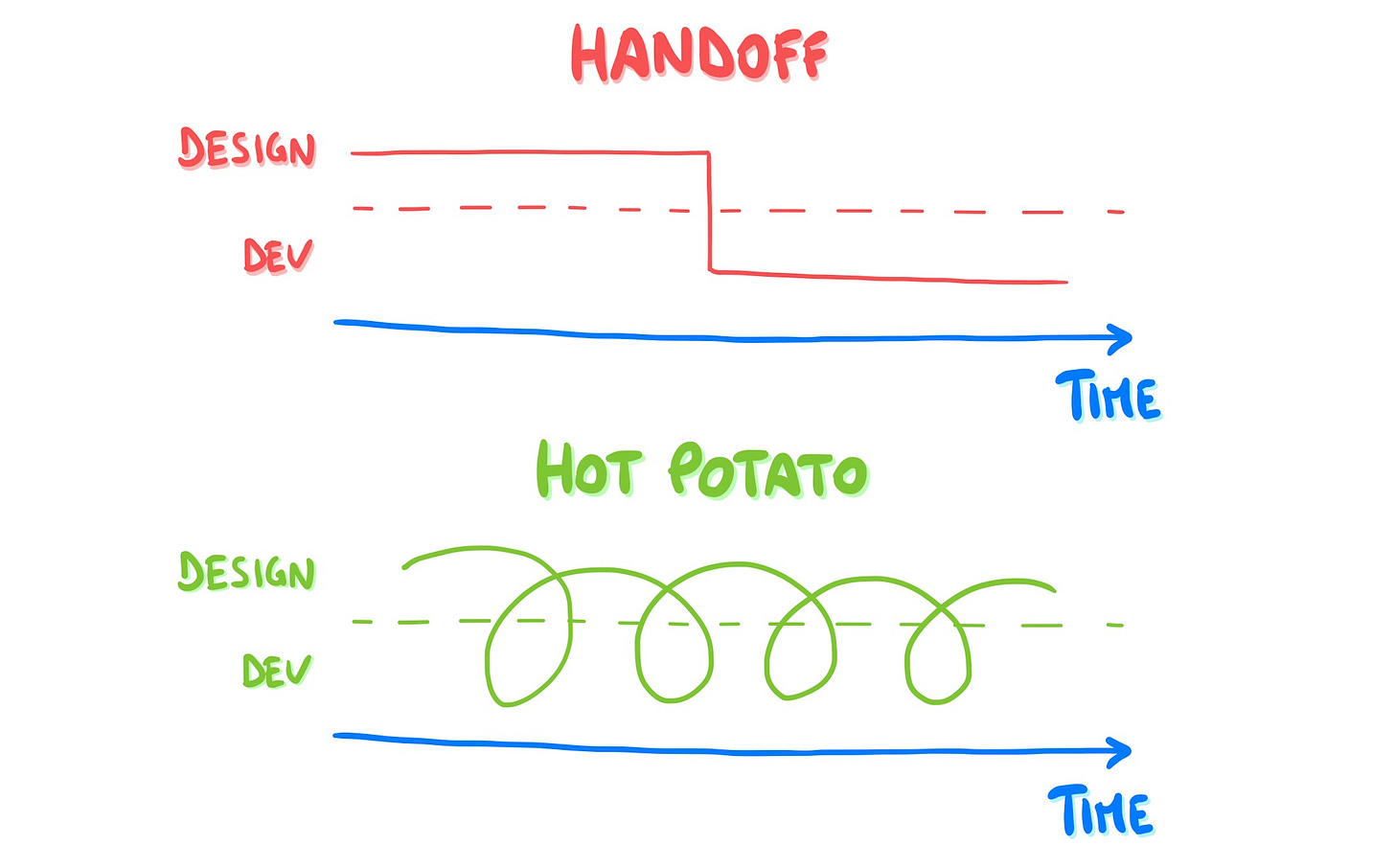

In many teams I have seen, the relationship between designers and devs only goes one way. Designers pass requirements to developers, and developers write code. That’s it.

When you think about it, the very word "handoff" suggests this: you push the designs, and you're done!

While this may work for small features, most projects benefit instead from continuous back-and-forth collaboration, in what Brad Frost calls “The Hot Potato Process”.

Ideas are frequently passed from designer to developer and back, for the entirety of the creation cycle.

Again, this is nothing new — it is iteration and collaboration, instead of "waterfall". You can take the same advice and apply it to almost any company process!

2) 🤖 AI Coding use cases

I have used AI for coding a lot in the last couple of months, mainly GitHub Copilot and Replit Ghostwriter (my favorite), because I wanted to write about it in the newsletter.

My take is that it is useful but not a silver bullet. Here are 7 use cases that worked for me, and 4 that didn't:

🥱 Boilerplate / Repetitive code — AI truly excels here. Creating the stubs for new components, common config, or adding libraries (e.g. add Tailwind to your project).

🔍 Write tests — This is a specific case of the previous point. AI can write good tests for your code, but expect to add more cases yourself. Still, you will never have to write another test stub in your life.

📑 Explain / document code — AI is very good at explaining code and creating docs for you. I have tried it with complex stuff and it always handled it well. Obscure code seems like a thing of the past. You can also try Mintlify or Theneo to generate automatic docs.

🛠️ Refactor small functions — For when explaining is not enough! AI is surprisingly good at splitting functions, refactoring them for better performance, and other small-scale maintenance.

🗄 SQL queries — This is an awesome use case, because SQL is no one’s favorite language. The generated queries are very good, as long as you provide the schema. You can also try Mason for this.

⬆️ Pull Requests — AI can create automatic descriptions for PRs and scan code to propose improvements, before it gets merged. This is a no-brainer and I expect it to become commonplace. You can use Codacy Quality for this.

💻 Terminal — I am using Warp as my main terminal because, besides a great autocomplete, it includes an AI assistant that can suggest and run commands for you. I always forget how to do shell things, so this is super useful.
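To make the refactoring point concrete, here is a minimal Python sketch of the kind of split an assistant typically proposes: one function that parses, filters, and formats all at once, broken into small helpers with the same behavior. The function names and the "name,amount" line format are hypothetical, chosen only for illustration.

```python
def summarize_orders(lines: list[str]) -> str:
    """Before: parsing, filtering, and formatting all in one function."""
    total = 0.0
    count = 0
    for line in lines:
        _, amount = line.split(",")
        value = float(amount)
        if value > 0:
            total += value
            count += 1
    return f"{count} orders, total {total:.2f}"


def parse_order(line: str) -> tuple[str, float]:
    """Split a hypothetical 'name,amount' line into its parts."""
    name, amount = line.split(",")
    return name, float(amount)


def positive_amounts(lines: list[str]) -> list[float]:
    """Keep only the amounts of orders that are greater than zero."""
    amounts = (parse_order(line)[1] for line in lines)
    return [value for value in amounts if value > 0]


def summarize_orders_refactored(lines: list[str]) -> str:
    """After: the same output, composed from the small helpers above."""
    amounts = positive_amounts(lines)
    return f"{len(amounts)} orders, total {sum(amounts):.2f}"


orders = ["a,10", "b,-5", "c,2.5"]
print(summarize_orders(orders))             # 2 orders, total 12.50
print(summarize_orders_refactored(orders))  # 2 orders, total 12.50
```

Keeping the old function around for a moment and checking that both versions return the same output on a few inputs is a cheap way to confirm the AI's refactor did not change behavior.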
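For the SQL point, since queries are only as good as the schema you provide, it helps to paste the real schema instead of describing it from memory. A minimal Python sketch, assuming a SQLite database, reads the `CREATE TABLE` statements from `sqlite_master` and puts them in front of the request. The tables, the `schema_prompt` helper, and the prompt wording are hypothetical; the resulting text is meant to be pasted into whatever assistant you use.

```python
import sqlite3


def schema_prompt(conn: sqlite3.Connection, request: str) -> str:
    """Build a prompt that lists every table's CREATE statement before the request."""
    rows = conn.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    schema = "\n".join(row[0] + ";" for row in rows)
    return f"Given this SQLite schema:\n{schema}\n\nWrite a SQL query to {request}."


# Hypothetical example tables, created in memory for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, "
    "user_id INTEGER REFERENCES users(id), amount REAL)"
)
print(schema_prompt(conn, "list the 5 users with the highest total order amount"))
```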

❌ Bad use cases

Now, it hasn’t been all sunshine and rainbows. There have been times when I spent more time debugging AI-generated code than I would probably have spent writing the whole thing myself.

The main problem is that AI almost never tells you “I don’t know how to do this”. Instead, it hallucinates and gives you the wrong answer.

So, I have learned to watch out for these situations:

Very recent stuff — If you ask for something relatively new (less than 2 years old), there is a higher risk of hallucinations because 1) AI might not have seen it in the training data, and/or 2) there were few examples of it.

Niche libraries — basically the same as above!

Specific library versions — AI isn’t good at keeping consistency between function signatures and the library versions you are using. Keep that in mind if you need something very specific.

Complex functions that need a lot of context — AI is generally good at creating complex functions when they work in isolation (e.g. algorithms, math stuff, etc.), but it isn’t the best when it has to take into account dependencies on a lot of other code.
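For the library-version problem above, one way to reduce the risk is to state the exact version you have installed in the prompt, instead of letting the assistant guess. A minimal Python sketch looks the version up with the standard library's `importlib.metadata` (Python 3.8+); the `version_hint` helper and its wording are hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version


def version_hint(package: str) -> str:
    """Return a one-line hint with the installed version, to paste into a prompt."""
    try:
        return f"Use {package} {version(package)}, the installed version."
    except PackageNotFoundError:
        # The package is not installed in the current environment.
        return f"{package} is not installed; ask which version to target."
```

For example, calling `version_hint("requests")` in a project that has `requests` installed returns a line with the real installed version, which you can add at the top of your request.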

More ideas on using AI in an engineering team 👇

3) 🤝 The three keys of delegation

In a way, delegating is like writing software. You take something you know well and communicate it in a way that is clear, unambiguous, and effective for someone else to execute.

When this someone else is a person — not a computer — there are three main things you should nail:

👁️ Purpose

🎯 Outcome

🔀 Communication

Let’s see all three:

👁️ Purpose

Purpose means: why we are doing this.

This is not only incredibly important for motivation, it also helps get better results. In fact, when people are aligned on purpose, they can take more initiative and propose alternative routes.

🎯 Outcome

You need to describe the outcome you want for the project.

In particular, you need to explain what success looks like. Sometimes it is easier to do so via inversion, by listing all the ways something would fail instead.

You should not, however, turn this into a step-by-step guide.

It’s a fine line, but you should focus on the what, rather than the how, to create space for people to develop their own solutions.

Focus on what you want to achieve, rather than how you would do it.

🔀 Communication

This is where you explain how the person should interact with you. That’s because delegation is not binary: for any given task, you may hand off only a part of what needs to be done.

This is especially true for decision making. Based on the kind of initiative you expect from the delegate, there might be various levels:

1) Delegate comes up with a short list of solutions, and you pick the best one.

2) Delegate comes up with a short list of solutions and suggests the best one, and you sign off.

3) Delegate autonomously decides on the best solution and goes for it.

In my experience, you can and should always go for at least 1+2.

More ideas on good delegation 👇

And that’s it for today! If you are finding this newsletter valuable, consider doing any of these:

1) ✉️ Subscribe to the newsletter — if you aren’t already, consider becoming a paid subscriber. You can learn more about the benefits of the paid plan here.

2) ❤️ Share it — Refactoring lives thanks to word of mouth. Share the article with your team or with someone to whom it might be useful!

I wish you a great week! ☀️

Luca
