Web Scraping Fundamentals — use cases, tech, and ethics 🕷️

A primer about this misunderstood technique, what to use it for, and how to do it right.

In last week’s article I talked in some detail about my previous startup, Wanderio.

I explained some of our biggest challenges, including the need for integrating transport suppliers that didn’t have an API. To overcome this, we often resorted to scraping, and we did a lot of it.

Over time, our scraping skill proved useful in more, unexpected circumstances:

Some supplier’s API was not exactly 99.99% available, so we used scraping as a fallback for when it went down (because yes, the websites often kept working).

Some supplier’s API was incomplete, so some operations were only available via scraping.

Some data was only available on the public web (e.g. airports geo data), and we extracted it from there.

I might be biased from this experience, but I believe extracting web data properly is an important tool for your tech strategy.

Still, I feel this domain is largely misunderstood. When I speak with other tech leaders about scraping, I typically find three areas of concern:

🎯 Use cases — people don’t know what to use it for.

🔌 Implementation — extracting web data is perceived as overly complex and fragile.

❤️ Legal & ethical concerns — scraping has a somewhat shady reputation.

These are long-standing arguments, but today they all have solid answers: use cases are copious; responsible scraping exists, and implementation is 10x easier than it was even just a few years ago.

The role of scraping in tech grew gradually, and then suddenly, thanks to the help of a singular trend: AI.

LLMs reignited the scraping conversation because they heavily rely on web data, for two tasks:

Training — models are extensively trained on web data.

Agent behavior — models increasingly need to perform real-time navigation to answer user queries and (possibly) make actions.

So, today’s article demystifies scraping and web data collection, from the perspective of an engineering leader who wants to explore how to use it for their team, and whether they should.

We will address the three concerns above by bringing in stories, real-world data from the industry, and my own experience.

Here is the agenda:

🎯 What to use web data for — a round-up of the most popular (and sometimes surprising) use cases.

🔌 How to collect web data — the four main ways, and the best options to implement them.

❤️ How to do responsible scraping — a recap of the regulatory framework + my personal moral compass about it.

Let’s dive in!

For this article, I am partnering with Bright Data, which kindly provided industry reports, data, and insights to make the piece more grounded and useful.

I trust Bright Data because, at Wanderio, we worked with them for many years. They know the industry better than anybody else, and pioneered the concept of ethical data collection, so they were a natural partner for this piece.

Still, I will only provide my unbiased opinion on any service / tool that is mentioned, Bright Data included.

🎯 What to use web data for

In my experience, use cases for web data can be split into two categories:

➡️ Direct — specific usage that is directly connected to the value prop of your product.

⬅️ Indirect — second-order benefits, more generic and widely applicable.

Direct use cases are for products that create value by aggregating, summarizing, reviewing, and generally transforming web data.

There is plenty of them — think of:

Search engines in the travel space — like Skyscanner or Kayak.

E-commerce aggregators — similar to Google Shopping

SEO tools — like Ahrefs or Semrush

Brand monitoring tools — like Mention or Brandwatch

These are all ad-hoc use cases. You can find similar strategies by reflecting on your product and whether it can deliver more value through web data.

There is also more general usage, that works well for many companies. Here are my favorite examples:

1) Security 🔒

You can intercept emerging security risks by tapping into threat intelligence feeds (e.g. here is a great list) and industry news on the web.

Based on your business, there is a lot you can anticipate, prevent, or mitigate this way: tech vulnerabilities, phishing attempts, data breaches, stolen information, and more.

For example, financial institutions monitor dark web forums for mentions of stolen credit card numbers, allowing them to proactively alert customers and prevent fraud.

2) Market & competition insights 📈

Web data is, of course, a treasure trove of info about your market and competitors. For some businesses, this doesn’t really move the needle, while for others it’s crucial.

Think of e-commerce stores:

🏷️ Dynamic pricing — get data from competitors' websites, product pages, and deal sites to automatically inform your pricing decisions.

🚀 Track product launches — keep tabs on new product announcements that are relevant to you (e.g. new items you can sell).

😊 Analyze customer sentiment — gather and analyze customer reviews and ratings across various platforms to understand market trends.

3) Brand health & customer support 🩺

You can monitor your brand’s health through social media analysis, online reviews and ratings, gaining insights into brand perception.

The same approach can be adopted to intercept issues and provide proactive customer support. For example, that’s what many telcos do to spot issues in their networks.

4) Building AI models 🤖

Finally, AI stands out as a meta use case. You can use web data to fine-tune base models or create RAG systems for a variety of use cases, including the ones above.

We also discussed it in this previous article:

🔌 How to collect web data

There are various ways to extract web data, on the spectrum that goes from buy to build. Let’s explore the four main ones, commenting on pros and cons, and ideal use cases:

1) Ready-made datasets 🗃️

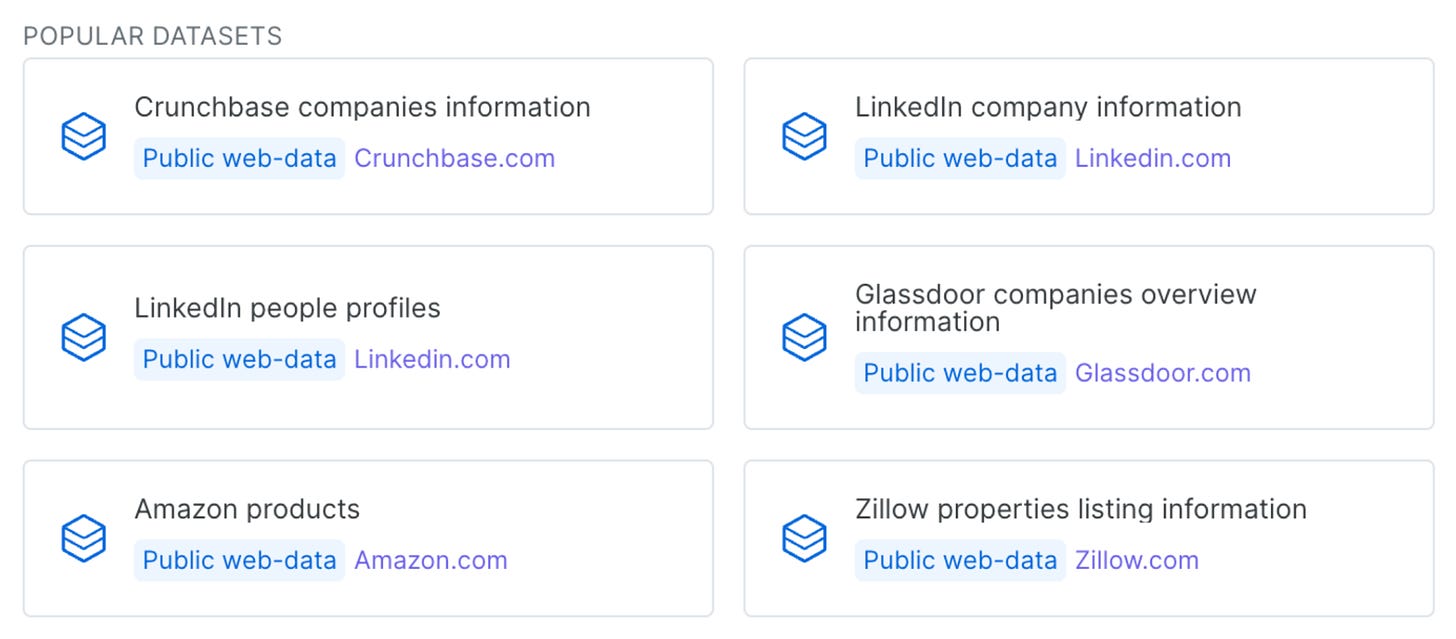

Several companies offer pre-collected datasets on various topics. Examples are Bright Data, Oxylabs, or Webz.

These datasets are pricey but also incredibly rich, and they get refreshed on a regular basis. For example, for ~$50K, you can buy:

💼 500M Linkedin profile records — to power a massive outreach campaign.

🛒 270M Amazon product records — to perform market analysis for your e-commerce, inform pricing and predict trends.

🏠 130M Zillow listings — to spot real estate trends and investment opportunities.

The main advantage of this approach, of course, is convenience: you get clean, structured data without the hassle of collecting it yourself.

Downsides are 1) cost, 2) not real-time data, and 3) only available for popular websites.

2) Third-party APIs that wrap websites 🕷️

Just like you can buy datasets, you can buy access to APIs that wrap popular websites like Amazon or Google.

This is often a confusing proposition, because at first glance you may wonder: why should I use a wrapper instead of the API of the same service?