🔍 Sema — the GenAI code monitor

Last week I had an insightful chat with Matt, CEO of Sema and long-time member of Refactoring, about the ripple effects of the use of AI in coding.

Since Copilot and ChatGPT are used by the vast majority of devs today, more and more teams are looking for ways to measure their use of AI-generated code — for M&A scenarios, due diligence, or simply as an internal health check.

Checking for AI-generated code is becoming the new checking for open source, just harder. At Sema, Matt is building the AI Code Monitor to help, and I am happy to promote it in the newsletter today.

You can sign up below for a free self-serve pilot, and as a Refactoring reader you get 2 extra free weeks 🎁

Back to this week’s ideas! 👇

1) 🔄 Manager vs Engineer feedback loops

There are countless ways in which your job changes when you go from being an IC to a manager, but one of the most peculiar is your feedback loop.

The feedback loop for your work is the way you learn whether what you are doing is good, is working, and how you can do better.

🔨 As an engineer — your feedback loop is short. You develop a feature, release it, see how it works and move on to the next thing. You work in small batches, and you have a direct understanding of how you are doing.

🎽 As a manager — your feedback loop is murky. You work on systems and processes, and measuring these 1) is hard, and 2) takes a long time. E.g. we have removed standups: are we doing better? How do we even know?

These challenges for a manager exist, in a way, by design — because the manager’s role is naturally more about the future than that of an IC.

Nevertheless, you should strive to create good feedback loops even when it’s harder to do so. In a manager’s work, this is often about qualitative data, rather than quantitative.

E.g. is the developer experience hard to measure? Run a survey. Talk with people.

In other words, while feedback for engineers is more explicit and continuous, for managers it most often requires asking for it.

This is one of the things I discussed with my friend Thiago Ghisi during our chat on the podcast.

More lessons from the podcast guests below 👇

2) ⬇️ QA vs end-to-end testing

When we talk about QA, we usually refer to a specific approach to testing: testing the product as an end user would. This means actually using features and workflows to figure out if something is broken.

A few months back I wrote a full piece about testing, which identified three main categories of tests: unit, integration, and end-to-end.

With some degree of simplification, QA is about end-to-end testing.

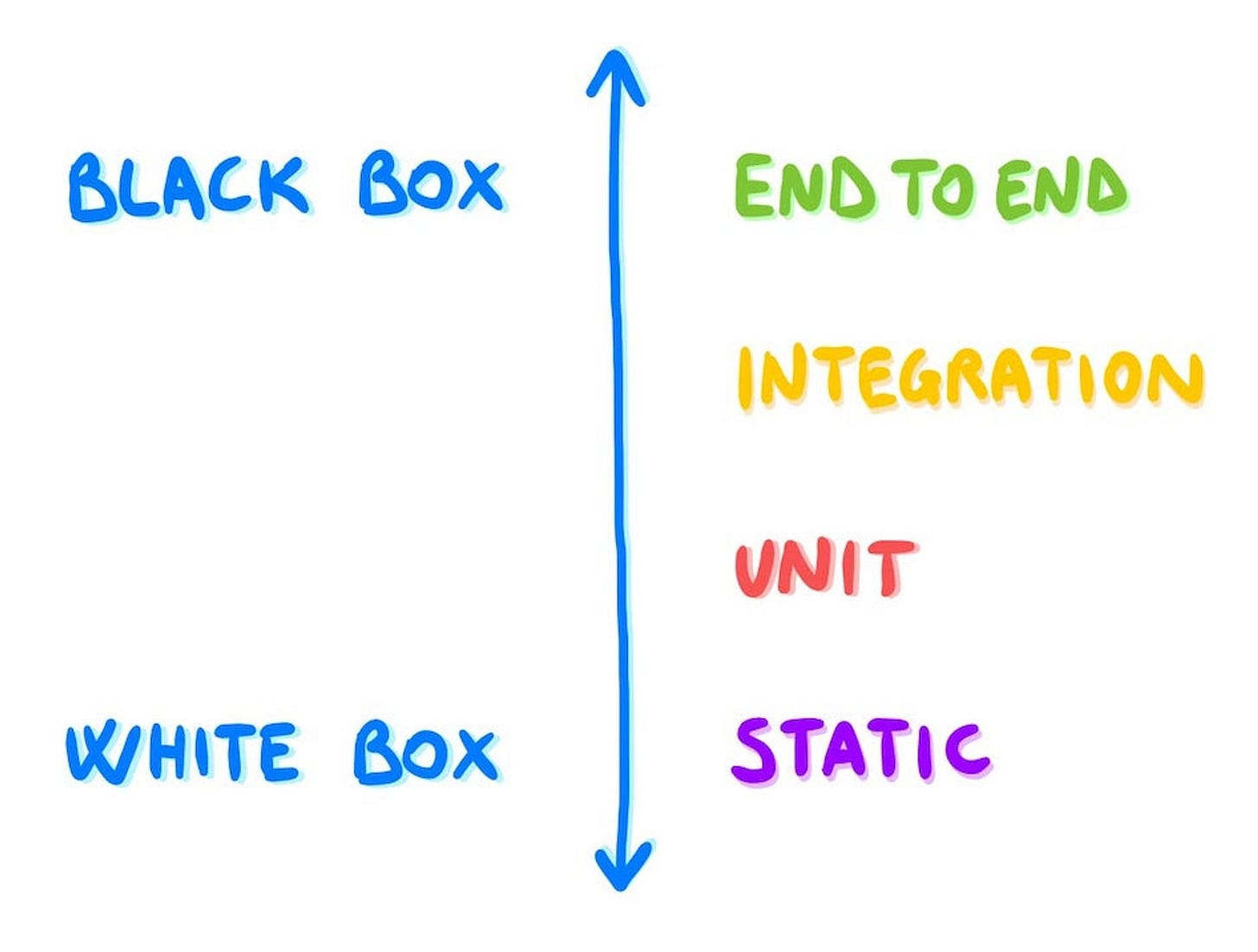

End-to-end testing is, again, testing your product from the user perspective. This is also often called black-box testing, because it can be performed without much knowledge of tech internals. White-box testing, like unit and integration testing, is instead more coupled to the specific tech.

Honestly I am not a big fan of this definition, because, to me, testing looks more like a continuum than a black/white split 👇

If you imagine tests as a pyramid with unit tests at the bottom and end-to-end tests at the top, then the higher you go, the more complex the workflows you're testing. And the more you move upwards, the more layers you have below, which is what makes the box darker.

In fact, each layer acts as a black box for the ones below:

Unit tests ignore the specific implementation of functions.

Integration tests ignore how workflows are performed internally.

End-to-end tests ignore pretty much everything below them.
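
To make the layers concrete, here is a minimal sketch in Python. Everything in it is hypothetical and only for illustration: `apply_discount`, `Cart`, `checkout`, and the `TEN` coupon are made-up names, not taken from any real codebase. Each test only talks to its own layer and treats what sits below as a black box.

```python
# Hypothetical toy shop, used only to illustrate the three test layers.

def apply_discount(total_cents: int, percent: int) -> int:
    """Lowest layer: a pure function working on whole cents."""
    return total_cents - (total_cents * percent) // 100


class Cart:
    """Middle layer: a workflow that combines items and the discount."""

    def __init__(self) -> None:
        self.items: list[tuple[str, int]] = []

    def add(self, name: str, price_cents: int) -> None:
        self.items.append((name, price_cents))

    def total(self, discount_percent: int = 0) -> int:
        subtotal = sum(price for _, price in self.items)
        return apply_discount(subtotal, discount_percent)


def checkout(order_lines: list[str], coupon: str = "") -> str:
    """Top layer: what the user sees. Takes lines like 'book:1200'."""
    cart = Cart()
    for line in order_lines:
        name, price = line.split(":")
        cart.add(name, int(price))
    percent = 10 if coupon == "TEN" else 0  # assumed coupon rule
    return f"Total: {cart.total(percent) / 100:.2f}"


def test_unit() -> None:
    # Checks input and output of one function, not how it computes them.
    assert apply_discount(1000, 10) == 900


def test_integration() -> None:
    # Checks that Cart and the discount work together,
    # without caring how total() is computed internally.
    cart = Cart()
    cart.add("book", 1200)
    cart.add("pen", 300)
    assert cart.total(discount_percent=10) == 1350


def test_end_to_end() -> None:
    # Checks only what the user would see: text in, receipt out.
    assert checkout(["book:1200", "pen:300"], coupon="TEN") == "Total: 13.50"
```

Notice how the end-to-end test would keep passing even if `Cart` were rewritten from scratch, while the unit test would not survive a change to the signature of `apply_discount`. That is the "darker box" effect in practice.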

You can find my full article about testing below 👇

3) ⚖️ Abstractions vs payoff

Most of the time, when talking about fast-moving startups, we warn against creating premature abstractions because these may be invalidated by product evolution.

Something that is true today might not be true tomorrow, so we should only invest, engineering-wise, in what feels core.

In my experience, however, that’s not enough to guarantee that an abstraction is successful. The other angle you should consider is the timeline for its payoff.

You may invest in the right abstraction, but if its payoff is too far in the future, that still leads to failure. You may be planning for 10x the traffic, or 10x the engineers, and not survive long enough to see it.

With time and gray hair I have become more and more conservative about tech investments.

As a startup you should focus on stuff that is truly core to your value prop, and whose payoff is reasonably short-term.

And that’s it for today! If you are finding this newsletter valuable, consider doing any of these:

1) 🔒 Subscribe to the paid version — if you aren’t already, consider becoming a paid subscriber. 1500+ engineers and managers have joined already! Learn more about the benefits of the paid plan here.

2) 🍻 Read with your friends — Refactoring lives thanks to word of mouth. Share the article with someone who would like it, and get a free membership through the new referral program.

I wish you a great week! ☀️

Luca

I agree, QA is a spectrum! And we probably need some proportion of all of the testing types on the spectrum. In my experience, the more end-to-end the test is, the more complex it becomes to implement, so you’ll want to apply it more sparingly (to the most important parts of your app). Unit tests are easy and should probably be employed whenever possible (when useful).